Before we Start

Overview

Teaching: 25 min

Exercises: 15 minQuestions

How to find your way around RStudio?

How to interact with R?

How to manage your environment?

How to install packages?

Objectives

Install latest version of R.

Install latest version of RStudio.

Navigate the RStudio GUI.

Install additional packages using the packages tab.

Install additional packages using R code.

What is R? What is RStudio?

The term “R” is used to refer to both the programming language and the

software that interprets the scripts written using it.

RStudio is currently a very popular way to not only write your R scripts but also to interact with the R software. To function correctly, RStudio needs R and therefore both need to be installed on your computer.

To make it easier to interact with R, we will use RStudio. RStudio is the most popular IDE (Integrated Development Environment) for R. An IDE is a piece of software that provides tools to make programming easier.

Why learn R?

R does not involve lots of pointing and clicking, and that’s a good thing

The learning curve might be steeper than with other software, but with R, the results of your analysis do not rely on remembering a succession of pointing and clicking, but instead on a series of written commands, and that’s a good thing! So, if you want to redo your analysis because you collected more data, you don’t have to remember which button you clicked in which order to obtain your results; you just have to run your script again.

Working with scripts makes the steps you used in your analysis clear, and the code you write can be inspected by someone else who can give you feedback and spot mistakes.

Working with scripts forces you to have a deeper understanding of what you are doing, and facilitates your learning and comprehension of the methods you use.

R code is great for reproducibility

Reproducibility is when someone else (including your future self) can obtain the same results from the same dataset when using the same analysis.

R integrates with other tools to generate manuscripts from your code. If you collect more data, or fix a mistake in your dataset, the figures and the statistical tests in your manuscript are updated automatically.

An increasing number of journals and funding agencies expect analyses to be reproducible, so knowing R will give you an edge with these requirements.

R is interdisciplinary and extensible

With 10,000+ packages that can be installed to extend its capabilities, R provides a framework that allows you to combine statistical approaches from many scientific disciplines to best suit the analytical framework you need to analyze your data. For instance, R has packages for image analysis, GIS, time series, population genetics, and a lot more.

R works on data of all shapes and sizes

The skills you learn with R scale easily with the size of your dataset. Whether your dataset has hundreds or millions of lines, it won’t make much difference to you.

R is designed for data analysis. It comes with special data structures and data types that make handling of missing data and statistical factors convenient.

R can connect to spreadsheets, databases, and many other data formats, on your computer or on the web.

R produces high-quality graphics

The plotting functionalities in R are endless, and allow you to adjust any aspect of your graph to convey most effectively the message from your data.

R has a large and welcoming community

Thousands of people use R daily. Many of them are willing to help you through mailing lists and websites such as Stack Overflow, or on the RStudio community. Questions which are backed up with short, reproducible code snippets are more likely to attract

Not only is R free, but it is also open-source and cross-platform

Anyone can inspect the source code to see how R works. Because of this transparency, there is less chance for mistakes, and if you (or someone else) find some, you can report and fix bugs.

Because R is open source and is supported by a large community of developers and users, there is a very large selection of third-party add-on packages which are freely available to extend R’s native capabilities.

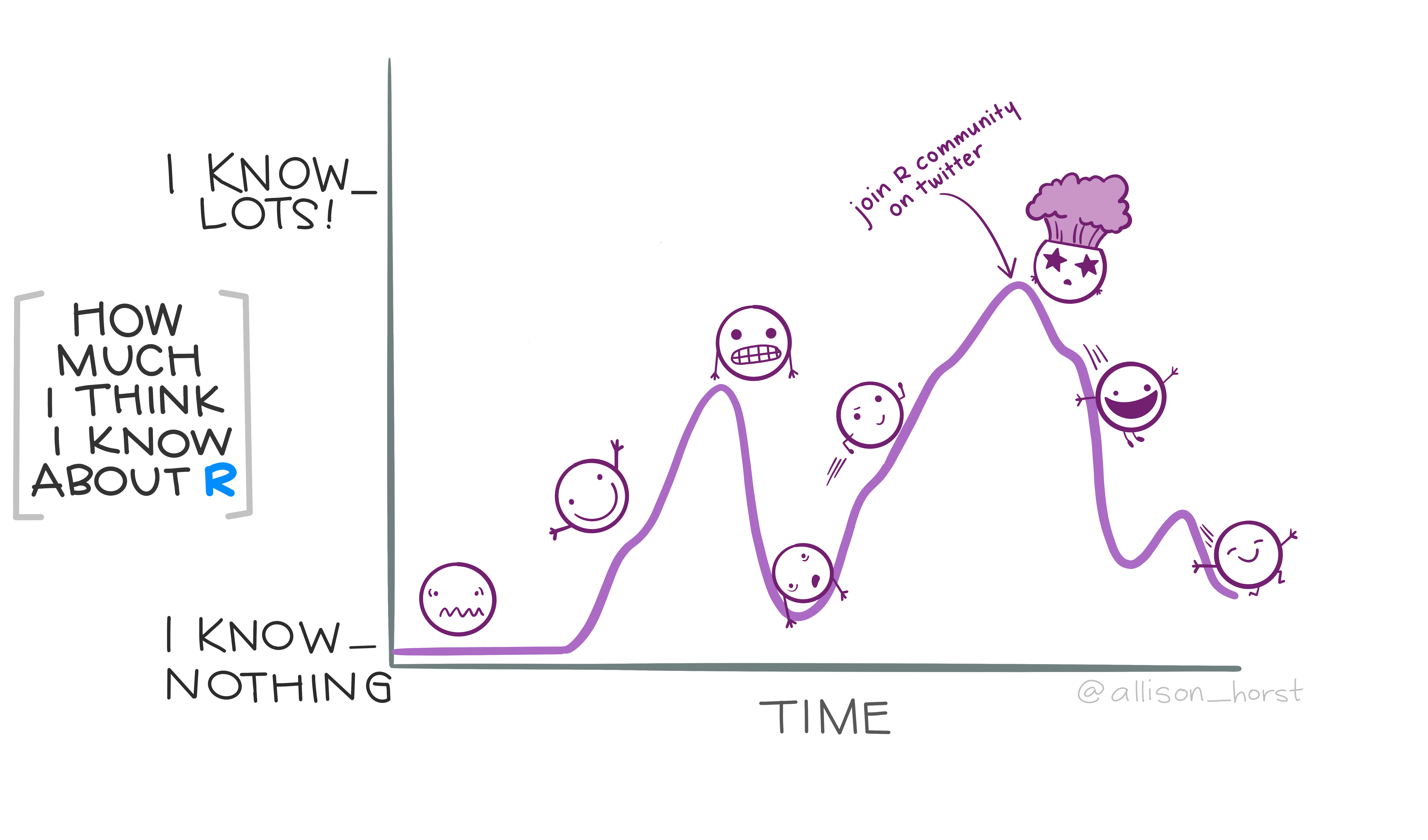

RStudio extends what R can do, and makes it easier to write R code and interact with R. Artwork by @allison_horst.

Key Points

Use RStudio to write and run R programs.

Use

install.packages()to install packages (libraries).

Introduction to R

Overview

Teaching: 30 min

Exercises: 10 minQuestions

What data types are available in R?

What is an object?

How can values be initially assigned to variables of different data types?

What arithmetic and logical operators can be used?

How can subsets be extracted from vectors?

How does R treat missing values?

How can we deal with missing values in R?

Objectives

Define the following terms as they relate to R: object, assign, call, function, arguments, options.

Create objects and assign values to them in R.

Learn how to name objects.

Save a script file for later use.

Use comments to inform script.

Solve simple arithmetic operations in R.

Call functions and use arguments to change their default options.

Inspect the content of vectors and manipulate their content.

Subset and extract values from vectors.

Analyze vectors with missing data.

Creating Objects in R

You can get output from R simply by typing math in the console:

3 + 5

[1] 8

12 / 7

[1] 1.714286

However, to do useful and interesting things, we need to assign values to

objects. To create an object, we need to give it a name followed by the

assignment operator <-, and the value we want to give it:

weight_kg <- 55

<- is the assignment operator. It assigns values on the right to objects on

the left. So, after executing x <- 3, the value of x is 3. For historical reasons, you can also use =

for assignments, but not in every context. Because of the

slight

differences

in syntax, it is good practice to always use <- for assignments.

In RStudio, typing Alt + - (push Alt at the same time as the - key) will write ` <- ` in a single keystroke in a PC, while typing Option + - (push Option at the same time as the - key) does the same in a Mac.

Objects can be given almost any name such as x, current_temperature, or subject_id. Here are some further guidelines on naming objects:

- You want your object names to be explicit and not too long.

- They cannot start with a number (

2xis not valid, butx2is). - R is case sensitive, so for example,

weight_kgis different fromWeight_kg. - There are some names that cannot be used because they are the names of fundamental functions in R (e.g.,

if,else,for, see here for a complete list). In general, even if it’s allowed, it’s best to not use other function names (e.g.,c,T,mean,data,df,weights). If in doubt, check the help to see if the name is already in use. - It’s best to avoid dots (

.) within names. Many function names in R itself have them and dots also have a special meaning (methods) in R and other programming languages. To avoid confusion, don’t include dots in names. - It is recommended to use nouns for object names and verbs for function names.

- Be consistent in the styling of your code, such as where you put spaces, how you name objects, etc. Styles can include “lower_snake”, “UPPER_SNAKE”, “lowerCamelCase”, “UpperCamelCase”, etc. Using a consistent coding style makes your code clearer to read for your future self and your collaborators. In R, three popular style guides come from Google, Jean

Fan and the

tidyverse. The tidyverse style is very comprehensive and may seem overwhelming at first. You can install the

lintrpackage to automatically check for issues in the styling of your code.

Objects vs. Variables

What are known as

objectsinRare known asvariablesin many other programming languages. Depending on the context,objectandvariablecan have drastically different meanings. However, in this lesson, the two words are used synonymously. For more information see: https://cran.r-project.org/doc/manuals/r-release/R-lang.html#Objects

When assigning a value to an object, R does not print anything. You can force R to print the value by using parentheses or by typing the object name:

weight_kg <- 55 # doesn't print anything

(weight_kg <- 55) # but putting parenthesis around the call prints the value of `weight_kg`

weight_kg # and so does typing the name of the object

Now that R has weight_kg in memory, we can do arithmetic with it. For

instance, we may want to convert this weight into pounds (weight in pounds is 2.2 times the weight in kg):

2.2 * weight_kg

We can also change an object’s value by assigning it a new one:

weight_kg <- 57.5

2.2 * weight_kg

This means that assigning a value to one object does not change the values of

other objects. For example, let’s store the animal’s weight in pounds in a new

object, weight_lb:

weight_lb <- 2.2 * weight_kg

and then change weight_kg to 100.

weight_kg <- 100

What do you think is the current content of the object weight_lb? 126.5 or 220?

Saving Your Code

Up to now, your code has been in the console. This is useful for quick queries

but not so helpful if you want to revisit your work for any reason.

A script can be opened by pressing Ctrl + Shift +

N.

It is wise to save your script file immediately. To do this press

Ctrl + S. This will open a dialogue box where you

can decide where to save your script file, and what to name it.

The .R file extension is added automatically and ensures your file

will open with RStudio.

Don’t forget to save your work periodically by pressing Ctrl + S.

Comments

The comment character in R is #. Anything to the right of a # in a script

will be ignored by R. It is useful to leave notes and explanations in your

scripts.

For convenience, RStudio provides a keyboard shortcut to comment or uncomment a paragraph: after selecting the

lines you want to comment, press at the same time on your keyboard

Ctrl + Shift + C. If you only want to comment

out one line, you can put the cursor at any location of that line (i.e. no need

to select the whole line), then press Ctrl + Shift +

C.

Exercise

What are the values after each statement in the following?

mass <- 47.5 # mass? age <- 122 # age? mass <- mass * 2.0 # mass? age <- age - 20 # age? mass_index <- mass/age # mass_index?

Functions and their Arguments

Functions are “canned scripts” that automate more complicated sets of commands

including operations assignments, etc. Many functions are predefined, or can be

made available by importing R packages (more on that later). A function

usually takes one or more inputs called arguments. Functions often (but not

always) return a value. A typical example would be the function sqrt(). The

input (the argument) must be a number, and the return value (in fact, the

output) is the square root of that number. Executing a function (‘running it’)

is called calling the function. An example of a function call is:

weight_kg <- sqrt(10)

Here, the value of 10 is given to the sqrt() function, the sqrt() function

calculates the square root, and returns the value which is then assigned to

the object weight_kg. This function takes one argument, other functions might take several.

The return ‘value’ of a function need not be numerical (like that of sqrt()),

and it also does not need to be a single item: it can be a set of things, or

even a dataset. We’ll see that when we read data files into R.

Arguments can be anything, not only numbers or filenames, but also other objects. Exactly what each argument means differs per function, and must be looked up in the documentation (see below). Some functions take arguments which may either be specified by the user, or, if left out, take on a default value: these are called options. Options are typically used to alter the way the function operates, such as whether it ignores ‘bad values’, or what symbol to use in a plot. However, if you want something specific, you can specify a value of your choice which will be used instead of the default.

Let’s try a function that can take multiple arguments: round().

round(3.14159)

[1] 3

Here, we’ve called round() with just one argument, 3.14159, and it has

returned the value 3. That’s because the default is to round to the nearest

whole number. If we want more digits we can see how to do that by getting

information about the round function. We can use args(round) or look at the

help for this function using ?round.

args(round)

function (x, digits = 0)

NULL

?round

We see that if we want a different number of digits, we can

type digits=2 or however many we want.

round(3.14159, digits = 2)

[1] 3.14

If you provide the arguments in the exact same order as they are defined you don’t have to name them:

round(3.14159, 2)

[1] 3.14

And if you do name the arguments, you can switch their order:

round(digits = 2, x = 3.14159)

[1] 3.14

It’s good practice to put the non-optional arguments (like the number you’re rounding) first in your function call, and to specify the names of all optional arguments. If you don’t, someone reading your code might have to look up the definition of a function with unfamiliar arguments to understand what you’re doing.

Vectors and Data Types

A vector is the most common and basic data type in R, and is pretty much

the workhorse of R. A vector is composed by a series of values, which can be

either numbers or characters. We can assign a series of values to a vector using

the c() function. For example we can create a vector of animal weights and assign

it to a new object weight_g:

weight_g <- c(50, 60, 65, 82)

weight_g

A vector can also contain characters:

animals <- c("mouse", "rat", "dog")

animals

The quotes around “mouse”, “rat”, etc. are essential here. Without the quotes R

will assume objects have been created called mouse, rat and dog. As these objects

don’t exist in R’s memory, there will be an error message.

There are many functions that allow you to inspect the content of a

vector. length() tells you how many elements are in a particular vector:

length(weight_g)

length(animals)

An important feature of a vector, is that all of the elements are the same type of data.

The function class() indicates what kind of object you are working with:

class(weight_g)

class(animals)

The function str() provides an overview of the structure of an object and its

elements. It is a useful function when working with large and complex

objects:

str(weight_g)

str(animals)

You can use the c() function to add other elements to your vector:

weight_g <- c(weight_g, 90) # add to the end of the vector

weight_g <- c(30, weight_g) # add to the beginning of the vector

weight_g

In the first line, we take the original vector weight_g,

add the value 90 to the end of it, and save the result back into

weight_g. Then we add the value 30 to the beginning, again saving the result

back into weight_g.

We can do this over and over again to grow a vector, or assemble a dataset. As we program, this may be useful to add results that we are collecting or calculating.

An atomic vector is the simplest R data type and is a linear vector of a single type. Above, we saw

2 of the 6 main atomic vector types that R

uses: "character" and "numeric" (or "double"). These are the basic building blocks that

all R objects are built from. The other 4 atomic vector types are:

"logical"forTRUEandFALSE(the boolean data type)"integer"for integer numbers (e.g.,2L, theLindicates to R that it’s an integer)"complex"to represent complex numbers with real and imaginary parts (e.g.,1 + 4i) and that’s all we’re going to say about them"raw"for bitstreams that we won’t discuss further

You can check the type of your vector using the typeof() function and inputting your vector as the argument.

Vectors are one of the many data structures that R uses. Other important

ones are lists (list), matrices (matrix), data frames (data.frame),

factors (factor) and arrays (array).

Exercise

We’ve seen that atomic vectors can be of type character, numeric (or double), integer, and logical. But what happens if we try to mix these types in a single vector?

Solution

R implicitly converts them to all be the same type.

What will happen in each of these examples? (hint: use

class()to check the data type of your objects):num_char <- c(1, 2, 3, "a") num_logical <- c(1, 2, 3, TRUE) char_logical <- c("a", "b", "c", TRUE) tricky <- c(1, 2, 3, "4")Why do you think it happens?

Solution

Vectors can be of only one data type. R tries to convert (coerce) the content of this vector to find a “common denominator” that doesn’t lose any information.

How many values in

combined_logicalare"TRUE"(as a character) in the following example:num_logical <- c(1, 2, 3, TRUE) char_logical <- c("a", "b", "c", TRUE) combined_logical <- c(num_logical, char_logical)Solution

Only one. There is no memory of past data types, and the coercion happens the first time the vector is evaluated. Therefore, the

TRUEinnum_logicalgets converted into a1before it gets converted into"1"incombined_logical.You’ve probably noticed that objects of different types get converted into a single, shared type within a vector. In R, we call converting objects from one class into another class coercion. These conversions happen according to a hierarchy, whereby some types get preferentially coerced into other types. Can you draw a diagram that represents the hierarchy of how these data types are coerced?

Subsetting Vectors

If we want to extract one or several values from a vector, we must provide one or several indices in square brackets. For instance:

animals <- c("mouse", "rat", "dog", "cat")

animals[2]

animals[c(3, 2)]

We can also repeat the indices to create an object with more elements than the original one:

more_animals <- animals[c(1, 2, 3, 2, 1, 4)]

more_animals

R indices start at 1. Programming languages like Fortran, MATLAB, Julia, and R start counting at 1, because that’s what human beings typically do. Languages in the C family (including C++, Java, Perl, and Python) count from 0 because that’s simpler for computers to do.

Conditional Subsetting

Another common way of subsetting is by using a logical vector. TRUE will

select the element with the same index, while FALSE will not:

weight_g <- c(21, 34, 39, 54, 55)

weight_g[c(TRUE, FALSE, FALSE, TRUE, TRUE)]

Typically, these logical vectors are not typed by hand, but are the output of other functions or logical tests. For instance, if you wanted to select only the values above 50:

weight_g > 50 # will return logicals with TRUE for the indices that meet the condition

## so we can use this to select only the values above 50

weight_g[weight_g > 50]

You can combine multiple tests using & (both conditions are true, AND) or |

(at least one of the conditions is true, OR):

weight_g[weight_g > 30 & weight_g < 50]

weight_g[weight_g <= 30 | weight_g == 55]

weight_g[weight_g >= 30 & weight_g == 21]

Here, > for “greater than”, < stands for “less than”, <= for “less than

or equal to”, and == for “equal to”. The double equal sign == is a test for

numerical equality between the left and right hand sides, and should not be

confused with the single = sign, which performs variable assignment (similar

to <-).

A common task is to search for certain strings in a vector. One could use the

“or” operator | to test for equality to multiple values, but this can quickly

become tedious. The function %in% allows you to test if any of the elements of

a search vector are found:

animals <- c("mouse", "rat", "dog", "cat", "cat")

# return both rat and cat

animals[animals == "cat" | animals == "rat"]

# return a logical vector that is TRUE for the elements within animals

# that are found in the character vector and FALSE for those that are not

animals %in% c("rat", "cat", "dog", "duck", "goat", "bird", "fish")

# use the logical vector created by %in% to return elements from animals

# that are found in the character vector

animals[animals %in% c("rat", "cat", "dog", "duck", "goat", "bird", "fish")]

Challenge (Optional)

Can you figure out why

"four" > "five"returnsTRUE?Solution

When using “>” or “<” on strings, R compares their alphabetical order. Here “four” comes after “five”, and therefore is “greater than” it.

Missing Data

As R was designed to analyze datasets, it includes the concept of missing data

(which is uncommon in other programming languages). Missing data are represented

in vectors as NA.

When doing operations on numbers, most functions will return NA if the data

you are working with include missing values. This feature

makes it harder to overlook the cases where you are dealing with missing data.

You can add the argument na.rm = TRUE to calculate the result as if the missing

values were removed (rm stands for ReMoved) first.

heights <- c(2, 4, 4, NA, 6)

mean(heights)

max(heights)

mean(heights, na.rm = TRUE)

max(heights, na.rm = TRUE)

If your data include missing values, you may want to become familiar with the

functions is.na(), na.omit(), and complete.cases(). See below for

examples.

## Extract those elements which are not missing values.

## The ! character is also called the NOT operator

rooms[!is.na(heights)]

## Returns the object with incomplete cases removed.

#The returned object is an atomic vector of type `"numeric"` (or #`"double"`).

na.omit(heights)

## Extract those elements which are complete cases.

#The returned object is an atomic vector of type `"numeric"` (or #`"double"`).

heights[complete.cases(heights)]

Recall that you can use the typeof() function to find the type of your atomic vector.

Exercise

Using this vector of heights in inches, create a new vector,

heights_no_na, with the NAs removed.heights <- c(63, 69, 60, 65, NA, 68, 61, 70, 61, 59, 64, 69, 63, 63, NA, 72, 65, 64, 70, 63, 65)Use the function

median()to calculate the median of theheightsvector.Use R to figure out how many people in the set are taller than 67 inches.

Solution

heights <- c(63, 69, 60, 65, NA, 68, 61, 70, 61, 59, 64, 69, 63, 63, NA, 72, 65, 64, 70, 63, 65) # 1. heights_no_na <- heights[!is.na(heights)] # or heights_no_na <- na.omit(heights) # or heights_no_na <- heights[complete.cases(heights)] # 2. median(heights, na.rm = TRUE) # 3. heights_above_67 <- heights_no_na[heights_no_na > 67] length(heights_above_67)

Now that we have learned how to write scripts, and the basics of R’s data structures, we are ready to start working with the Portal dataset we have been using in the other lessons, and learn about data frames.

Key Points

Access individual values by location using

[].Access arbitrary sets of data using

[c(...)].Use logical operations and logical vectors to access subsets of data.

Starting with Data

Overview

Teaching: 15 min

Exercises: 5 minQuestions

What is a data.frame?

How can I read a complete csv file into R?

How can I get basic summary information about my dataset?

How are dates represented in R and how can I change the format?

Objectives

Load external data from a .csv file into a data frame.

Install and load packages.

Describe what a data frame is.

Summarize the contents of a data frame.

Use indexing to subset specific portions of data frames.

Describe what a factor is.

Convert between strings and factors.

Reorder and rename factors.

Change how character strings are handled in a data frame.

Format dates.

Loading the Survey Data

library(palmerpenguins)

data(package = 'palmerpenguins')

In this palmerpenguins package, there are two datasets. ‘penguins’ is a simplified version of the raw data, curated for instructional use. Throughout the workshop though, we will also work with the raw data, ‘penguins_raw’.

This dataset is hosted by Palmer Station Antarctica LTER, a member of the Long Term Ecological Research Network. You may also learn more about the research and methodology of this particular dataset: Ecological sexual dimorphism and environmental variability within a community of Antarctic penguins (genus Pygoscelis).

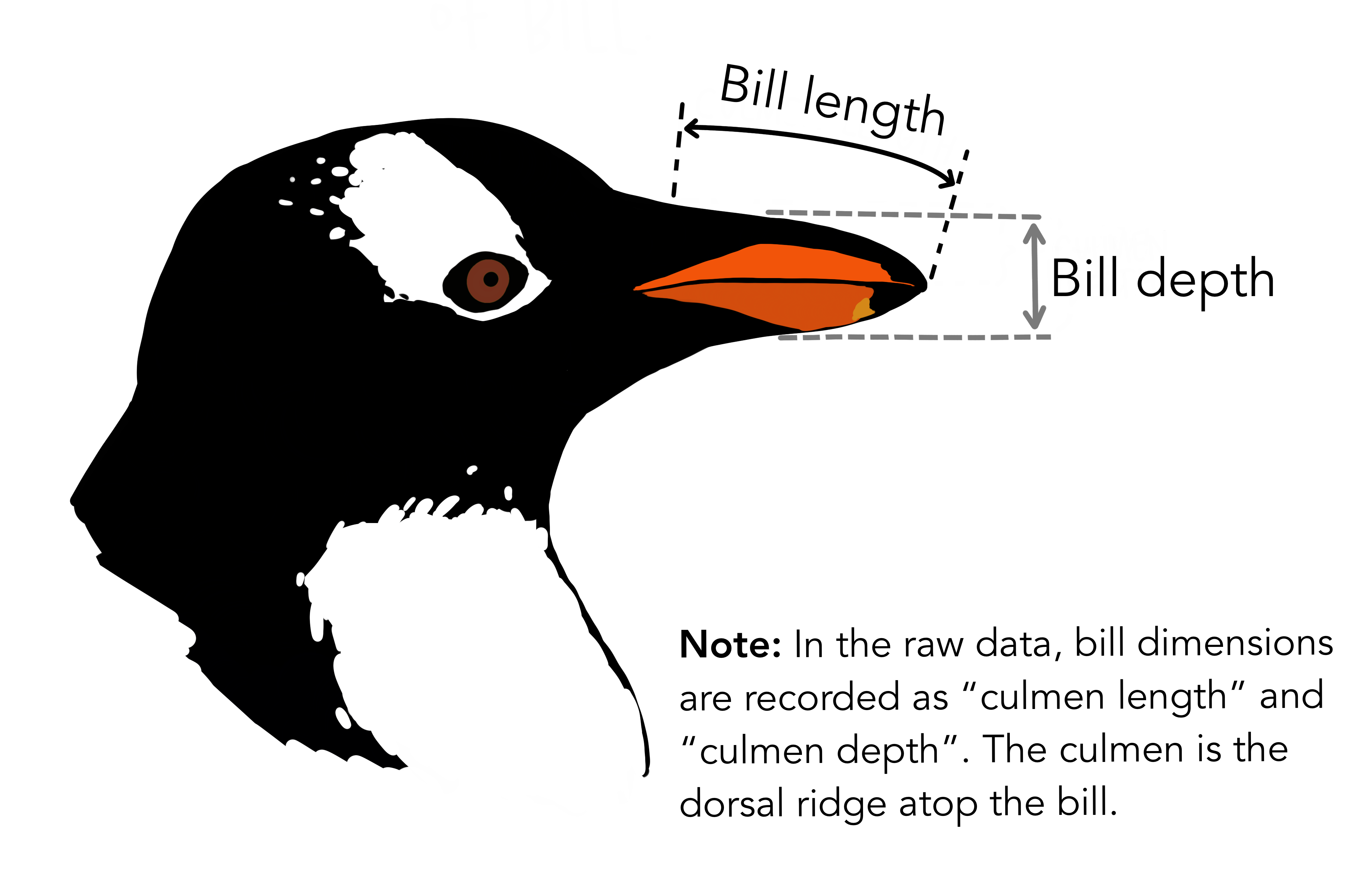

Artwork by @allison_horst

Artwork by @allison_horst

Data Moment: Packages Citation

To get the package’s citation for publication, we can use the

citation()function and input the name of the package,"palmerpenguins".citation("palmerpenguins")Citation increases reproducibility, which benefits both users and producers. Along with getting the proper credit, citations helps producers keep a bibliographic record of publications and usage which references the cited tools and data.

Because datasets are easier to find with consistent, proper citation of packages and data citation, this practice also encourages reuse of the data for new studies.

This data was originally published in:

Gorman KB, Williams TD, Fraser WR (2014). Ecological sexual dimorphism and environmental variability within a community of Antarctic penguins (genus Pygoscelis). PLoS ONE 9(3):e90081. https://doi.org/10.1371/journal.pone.0090081

The dataset is stored as a comma separated value (CSV) file. Each row holds information for a date, and the attributes information can be found in the help:

?penguins

?penguins_raw

Artwork by @allison_horst

Artwork by @allison_horst

Reading the Data into R

The file should be downloaded to the destination you specified. R has not

yet loaded the data from the file into memory. To do this, we can use the

read_csv() function from the tidyverse package.

Packages in R are basically sets of additional functions that let you do more

stuff. The functions we’ve been using so far, like round(), sqrt(), or c()

come built into R. Packages give you access to additional functions beyond base R.

A similar function to read_csv() from the tidyverse package is read.csv() from

base R. We don’t have time to cover their differences but notice that the exact

spelling determines which function is used.

Before you use a package for the first time you need to install it on your

machine, and then you should import it in every subsequent R session when you

need it.

To install the tidyverse package, we can type

install.packages("tidyverse") straight into the console. In fact, it’s better

to write this in the console than in our script for any package, as there’s no

need to re-install packages every time we run the script.

Then, to load the package type:

library(tidyverse)

Now we can use the functions from the tidyverse package.

Let’s use read_csv() to read the data into a data frame

(we will learn more about data frames later):

penguins <- read.csv(path_to_file("penguins.csv"))

If you have a csv file, you may use read_csv to create an object with it. path_to_file() is not nesessary to use read_csv().

When you execute read_csv on a data file, it looks through the first 1000 rows

of each column and guesses its data type. For example, in this dataset,

read_csv() reads flipper_length_mm as int (a numeric data type), and species

as char. You have the option to specify the data type for a column

manually by using the col_types argument in read_csv.

We can see the contents of the first few lines of the data by typing its

name: penguins. By default, this will show you as many rows and columns of

the data as fit on your screen.

If you wanted the first 50 rows, you could type print(penguins, n = 50)

We can also extract the first few lines of this data using the function

head():

head(penguins)

species island bill_length_mm bill_depth_mm

1 Adelie Torgersen 39.1 18.7

2 Adelie Torgersen 39.5 17.4

3 Adelie Torgersen 40.3 18.0

4 Adelie Torgersen NA NA

5 Adelie Torgersen 36.7 19.3

6 Adelie Torgersen 39.3 20.6

flipper_length_mm body_mass_g sex year

1 181 3750 male 2007

2 186 3800 female 2007

3 195 3250 female 2007

4 NA NA <NA> 2007

5 193 3450 female 2007

6 190 3650 male 2007

Unlike the print() function, head() returns the extracted data. You could

use it to assign the first 100 rows of penguins to an object using

penguins_sample <- head(penguins, 100). This can be useful if you want to try

out complex computations on a subset of your data before you apply them to the

whole data set.

There is a similar function that lets you extract the last few lines of the data

set. It is called (you might have guessed it) tail().

To open the dataset in RStudio’s Data Viewer, use the view() function:

view(penguins)

Note

read_csv()assumes that fields are delineated by commas. However, in several countries, the comma is used as a decimal separator and the semicolon (;) is used as a field delineator. If you want to read in this type of files in R, you can use theread_csv2()function. It behaves likeread_csv()but uses different parameters for the decimal and the field separators. There is also theread_tsv()for tab separated data files andread_delim()for less common formats. Check out the help forread_csv()by typing?read_csvto learn more.In addition to the above versions of the csv format, you should develop the habits of looking at and recording some parameters of your csv files. For instance, the character encoding, control characters used for line ending, date format (if the date is not split into three variables), and the presence of unexpected newlines are important characteristics of your data files. Those parameters will ease up the import step of your data in R.

What are Data Frames?

When we loaded the data into R, it got stored as an object of class tibble,

which is a special kind of data frame (the difference is not important for our

purposes, but you can learn more about tibbles

here).

Data frames are the de facto data structure for most tabular data, and what we

use for statistics and plotting.

Data frames can be created by hand, but most commonly they are generated by

functions like read_csv(); in other words, when importing

spreadsheets from your hard drive or the web.

A data frame is the representation of data in the format of a table where the columns are vectors that all have the same length. Because columns are vectors, each column must contain a single type of data (e.g., characters, integers, factors). For example, here is a figure depicting a data frame comprising a numeric, a character, and a logical vector.

We can see this also when inspecting the structure of a data frame

with the function

str():

str(penguins)

Inspecting Data Frames

We already saw how the functions head() and str() can be useful to check the

content and the structure of a data frame. Here is a non-exhaustive list of

functions to get a sense of the content/structure of the data. Let’s try them out!

- Size:

dim(penguins)- returns a vector with the number of rows in the first element, and the number of columns as the second element (the dimensions of the object)nrow(penguins)- returns the number of rowsncol(penguins)- returns the number of columns

- Content:

head(penguins)- shows the first 6 rowstail(penguins)- shows the last 6 rows

- Names:

names(penguins)- returns the column names (synonym ofcolnames()fordata.frameobjects)rownames(penguins)- returns the row names

- Summary:

str(penguins)- structure of the object and information about the class, length and content of each columnsummary(penguins)- summary statistics for each column

Note: most of these functions are “generic”, they can be used on other types of

objects besides data.frame.

Challenge

Based on the output of

str(penguins), can you answer the following questions?

- What is the class of the object

penguins?- How many rows and how many columns are in this object?

Solution

str(penguins)

- class: data frame

- 344 rows, 8 columns

Data Moment: Stuctures

When we look at the stucture of the raw data, we see that it holds 344 observations and 17 variables:

Indexing and Subsetting data frames

Our penguins data frame has rows and columns (it has 2 dimensions). In practice, we may not need the entire data frame; for instance, we may only be interested in a subset of the observations (the rows) or a particular set of variables (the columns). If we want to extract some specific data from it, we need to specify the “coordinates” we want from it. Row numbers come first, followed by column numbers.

# We can extract specific values by specifying row and column indices

# in the format:

# data_frame[row_index, column_index]

# For instance, to extract the first row and column from penguins:

penguins[1, 1]

# First row, fifth column:

penguins[1, 8]

# We can also use shortcuts to select a number of rows or columns at once

# To select all columns, leave the column index blank

# For instance, to select all columns for the first row:

penguins[1, ]

# The same shortcut works for rows --

# To select the first column across all rows:

penguins[, 1]

# An even shorter way to select first column across all rows:

penguins[1] # No comma!

# To select multiple rows or columns, use vectors!

# To select the first three rows of the 5th and 6th column

penguins[c(1, 2, 3), c(5, 6)]

# We can use the : operator to create those vectors for us:

penguins[1:3, 5:6]

# This is equivalent to head_penguins <- head(penguins)

head_penguins <- penguins[1:6, ]

# As we've seen, when working with tibbles

# subsetting with single square brackets ("[]") always returns a data frame.

# If you want a vector, use double square brackets ("[[]]")

# For instance, to get the first column as a vector:

penguins[[1]]

# To get the first value in our data frame:

penguins[[1, 1]]

: is a special function that creates numeric vectors of integers in increasing

or decreasing order, test 1:10 and 10:1 for instance.

You can also exclude certain indices of a data frame using the “-” sign:

penguins[, -1] # The whole data frame, except the first column

penguins[-(7:nrow(penguins)), ] # Equivalent to head(penguins)

Data frames can be subset by calling indices (as shown previously), but also by calling their column names directly:

# As before, using single brackets returns a data frame:

penguins["species"]

penguins[, "species"]

# Double brackets returns a vector:

penguins[["species"]]

# We can also use the $ operator with column names instead of double brackets

# This returns a vector:

penguins$species

In RStudio, you can use the autocompletion feature to get the full and correct names of the columns.

Exercise

Create a

data.frame(penguins_200) containing only the data in row 200 of thepenguinsdataset.Notice how

nrow()gave you the number of rows in the data frame?

- Use that number to pull out just that last row in the data frame.

- Compare that with what you see as the last row using

tail()to make sure it’s meeting expectations.- Pull out that last row using

nrow()instead of the row number.- Create a new data frame (

penguins_last) from that last row.Use

nrow()to extract the row that is in the middle of the data frame. Store the content of this row in an object namedpenguins_middle.Combine

nrow()with the-notation above to reproduce the behavior ofhead(penguins), keeping just the first through 6th rows of the penguins dataset.Solution

## 1. penguins_200 <- penguins[200, ] ## 2. # Saving `n_rows` to improve readability and reduce duplication n_rows <- nrow(penguins) penguins_last <- penguins[n_rows, ] ## 3. penguins_middle <-penguins[n_rows/2, ] ## 4. penguins_head <- penguins[-(7:n_rows), ]

Factors

When we did str(penguins) we saw that several of the columns consist of

integers. The columns species, island, sex, however, are

of the class character.

Arguably, these columns contain categorical data, that is, they can only take on

a limited number of values.

R has a special class for working with categorical data, called factor.

Factors are very useful and actually contribute to making R particularly well

suited to working with data. So we are going to spend a little time introducing

them.

Once created, factors can only contain a pre-defined set of values, known as levels. Factors are stored as integers associated with labels and they can be ordered or unordered. While factors look (and often behave) like character vectors, they are actually treated as integer vectors by R. So you need to be very careful when treating them as strings.

When importing a data frame with read_csv(), the columns that contain text are not automatically coerced (=converted) into the factor data type, but once we have

loaded the data we can do the conversion using the factor() function:

#create a variable that consists of two levels

low_hi <- c("low", "high", "low", "high")

summary(low_hi)

#using factor conversion

low_hi <- factor(low_hi)

We can see that the conversion has worked by using the summary()

function again. This produces a table with the counts for each factor level:

summary(low_hi)

high low

2 2

R will assign 1 to the level "high" and 2 to the level "low" (because

h comes before l, even though the first element in this vector is

"low"). You can see this by using the function levels() and you can find the

number of levels using nlevels():

levels(low_hi)

nlevels(low_hi)

Sometimes, the order of the factors does not matter, other times you might want

to specify the order because it is meaningful (e.g., “low”, “medium”, “high”),

it improves your visualization, or it is required by a particular type of

analysis. Here, one way to reorder our levels in the class vector would be:

low_hi # current order

low_hi <- factor(class, levels = c("low", "high"))

low_hi # after re-ordering

In R’s memory, these factors are represented by integers (1, 2, 3), but are more

informative than integers because factors are self describing: "low",

"high" is more descriptive than 1, 2. Which one is “low”? You wouldn’t

be able to tell just from the integer data. Factors, on the other hand, have

this information built in. It is particularly helpful when there are many levels.

Challenge

Change the columns

speciesandislandin thepenguinsdata frame into a factor.Using the functions you learned before, can you find out… How many rows contain Adelie species?

How many different islands did the research record at?

Solution

penguins$species <- factor(penguins$species) penguins$island <- factor(penguins$island) summary(penguins$species) nlevels(penguins$island)

- There are 152 adelie penguines in the

speciescolumn.- There are 3 unique values in the

islandcolumn

Converting factors

If you need to convert a factor to a character vector, you use

as.character(x).

as.character(low_hi)

In some cases, you may have to convert factors where the levels appear as

numbers (such as concentration levels or years) to a numeric vector. For

instance, in one part of your analysis the years might need to be encoded as

factors (e.g., comparing average weights across years) but in another part of

your analysis they may need to be stored as numeric values (e.g., doing math

operations on the years). This conversion from factor to numeric is a little

trickier. The as.numeric() function returns the index values of the factor,

not its levels, so it will result in an entirely new (and unwanted in this case)

set of numbers. One method to avoid this is to convert factors to characters,

and then to numbers.

Another method is to use the levels() function. Compare:

year_fct <- factor(c(1991, 1992, 1993, 1994, 1995))

as.numeric(year_fct) # Wrong! And there is no warning...

as.numeric(as.character(year_fct)) # Works...

as.numeric(levels(year_fct))[year_fct] # The recommended way.

Notice that in the levels() approach, three important steps occur:

- We obtain all the factor levels using

levels(year_fct) - We convert these levels to numeric values using

as.numeric(levels(year_fct)) - We then access these numeric values using the underlying integers of the

vector

year_fctinside the square brackets

as.numeric(year_fct) # Wrong! And there is no warning...

3 1 2 4 5

as.numeric(as.character(year_fct)) # Works...

[1] 1991 1992 1993 1994 1995

as.numeric(levels(year_fct))[year_fct] # The recommended way.

[1] 1991 1992 1993 1994 1995

Notice that in the recommended levels() approach, three important steps occur:

- We obtain all the factor levels using

levels(year_fct) - We convert these levels to numeric values using

as.numeric(levels(year_fct)) - We then access these numeric values using the underlying integers of the

vector

year_fctinside the square brackets

Renaming factors

When your data is stored as a factor, you can get a quick glance at the number of observations represented by each factor level. Let’s look at the number of males and females observed:

penguins$sex <- factor(penguins$sex)

plot(penguins$sex)

However, as we saw when we used summary(surveys$sex), there are 11 individuals for which the sex information hasn’t been recorded. To show them in the plot, we can turn the missing values into a factor level with the addNA() function. We will also have to give the new factor level a label. We are going to work with a copy of the sex column, so we’re not modifying the working copy of the data frame:

sex <- surveys$sex

levels(sex)

sex <- addNA(sex)

levels(sex)

head(sex)

levels(sex)[3] <- "undetermined"

levels(sex)

Now we can plot the data again, using plot(sex).

Challenge:

Rename “F” and “M” to “female” and “male” respectively. Now that we have renamed the factor level to “undetermined”, can you recreate the barplot such that “undetermined” is first (before “female”)?

Solution

levels(sex)[1:2] <- c("F", "M") sex <- factor(sex, levels = c("undetermined", "female", "male")) plot(sex)

Challenge

We have seen how data frames are created when using the

read.csv(), but they can also be created by hand with thedata.frame()function. There are a few mistakes in this hand-crafteddata.frame, can you spot and fix them? Don’t hesitate to experiment!animal_data <- data.frame(animal = c("dog", "cat", "sea cucumber", "sea urchin"), feel = c("furry", "squishy", "spiny"), weight = c(45, 8 1.1, 0.8))## Challenge: ## There are a few mistakes in this hand-crafted `data.frame`, ## can you spot and fix them? Don't hesitate to experiment! animal_data <- data.frame(animal = c(dog, cat, sea cucumber, sea urchin), feel = c("furry", "squishy", "spiny"), weight = c(45, 8 1.1, 0.8))Can you predict the class for each of the columns in the following example? Check your guesses using

str(country_climate):

- Are they what you expected? Why? Why not?

- What would have been different if we had added

stringsAsFactors = FALSEto this call?- What would you need to change to ensure that each column had the accurate data type?

country_climate <- data.frame( country = c("Canada", "Panama", "South Africa", "Australia"), climate = c("cold", "hot", "temperate", "hot/temperate"), temperature = c(10, 30, 18, "15"), northern_hemisphere = c(TRUE, TRUE, FALSE, "FALSE"), has_kangaroo = c(FALSE, FALSE, FALSE, 1) )## Challenge: ## Can you predict the class for each of the columns in the following ## example? ## Check your guesses using `str(country_climate)`: ## * Are they what you expected? Why? why not? ## * What would have been different if we had added `stringsAsFactors = FALSE` ## to this call? ## * What would you need to change to ensure that each column had the ## accurate data type? country_climate <- data.frame(country = c("Canada", "Panama", "South Africa", "Australia"), climate = c("cold", "hot", "temperate", "hot/temperate"), temperature = c(10, 30, 18, "15"), northern_hemisphere = c(TRUE, TRUE, FALSE, "FALSE"), has_kangaroo = c(FALSE, FALSE, FALSE, 1))Solution

- missing quotations around the names of the animals

- missing one entry in the “feel” column (probably for one of the furry animals)

missing one comma in the weight column

country,climate,temperature, andnorthern_hemisphereare factors;has_kangaroois numeric.- using

stringsAsFactors=FALSEwould have made them character instead of factors- removing the quotes in temperature, northern_hemisphere, and replacing 1 by TRUE in the

has_kangaroocolumn would probably what was originally intended.

Formatting Dates

A common issue that new (and experienced!) R users have is converting date and time information into a variable that is suitable for analyses. One way to store date information is to store each component of the date in a separate column. Using str(), we can confirm that our data frame does indeed have a separate column for day, month, and year, and that each of these columns contains integer values.

str(penguins)

We are going to use the ymd() function from the package lubridate (which belongs to the tidyverse; learn more here). lubridate gets installed as part as the tidyverse installation. When you load the tidyverse (library(tidyverse)), the core packages (the packages used in most data analyses) get loaded. lubridate however does not belong to the core tidyverse, so you have to load it explicitly with library(lubridate)

Start by loading the required package:

library(lubridate)

The lubridate package has many useful functions for working with dates. These can help you extract dates from different string representations, convert between timezones, calculate time differences and more. You can find an overview of them in the lubridate cheat sheet.

Let’s create an object and inspect the structure:

str("2022-01-01")

chr "2022-01-01"

Note that R reads this as a character. Now let’s paste the year, month, and day separately - we get the same result:

# sep indicates the character to use to separate each component

str(paste("2022", "01", "01", sep = "-"))

chr "2022-1-1"

Here we will use the function ymd(), which takes a vector representing year, month, and day, and converts it to a Date vector. Date is a class of data recognized by R as being a date and can be manipulated as such. The argument that the function requires is flexible, but, as a best practice, is a character vector formatted as “YYYY-MM-DD”.

my_date <- ymd(paste("2022", "01", "01", sep = "-"))

str(my_date)

Now lets look at the penguines_raw dataset and extract the column Date Egg.

egg_dates <- penguins_raw$`Date Egg`

str(egg_dates)

Let’s extract our Date Egg column and inspect the structure.

What is “Date Egg”?

?penguins_raw

We are able to pull the year, month, and day from the date values:

egg_yr <- year(egg_dates)

egg_mo <- month(egg_dates)

egg_day <- day(egg_dates)

When we imported the data in R, read_csv() recognized that this column

contained date information. We can use the day(), month() and year()

functions to extract this information from the date, and create new columns in

our data frame to store it:

# we can create dataframes

egg_dates_df <- data.frame(year = egg_yr, month = egg_mo, day = egg_day)

egg_dates_df

year month day

1 2007 11 11

2 2007 11 11

3 2007 11 16

4 2007 11 16

5 2007 11 16

...

These character vectors can be used as the argument for ymd():

ymd(paste(egg_dates_df$year, egg_dates_df$month, egg_dates_df$day, sep = "-"))

In our example above, the Date Egg column was read in correctly as a

Date variable but generally that is not the case. Date columns are often read

in as character variables and one can use the as_date() function to convert

them to the appropriate Date/POSIXctformat.

Let’s say we have a vector of dates in character format:

char_dates <- c("01/01/2020", "02/02/2020", "03/03/2020")

str(char_dates)

chr [1:3] "01/01/2020" "02/02/2020" "03/03/2020"

We can convert this vector to dates as :

as_date(char_dates, format = "%m/%d/%Y")

[1] "2012-07-31" "2014-08-09" "2016-04-30"

Argument format tells the function the order to parse the characters and

identify the month, day and year. The format above is the equivalent of

mm/dd/yyyy. A wrong format can lead to parsing errors or incorrect results.

For example, observe what happens when we use a lower case y instead of upper case Y for the year.

as_date(char_dates, format = "%m/%d/%y")

[1] "2020-01-01" "2020-02-02" "2020-03-03"

Here, the %y part of the format stands for a two-digit year instead of a

four-digit year, and this leads to parsing errors.

Or in the following example, observe what happens when the month and day elements of the format are switched.

as_date(char_dates, format = "%d/%m/%y")

[1] NA "2020-09-08" NA

Since there is no month numbered 30 or 31, the first and third dates cannot be parsed.

We can also use functions ymd(), mdy() or dmy() to convert character

variables to date.

mdy(char_dates)

Data Package Citation

Horst AM, Hill AP, Gorman KB (2020). palmerpenguins: Palmer Archipelago (Antarctica) penguin data. R package version 0.1.0. https://allisonhorst.github.io/palmerpenguins/

Key Points

Use read_csv to read tabular data in R.

Use factors to represent categorical data in R.

Manipulating, analyzing and exporting data with tidyverse

Overview

Teaching: 50 min

Exercises: 30 minQuestions

What are dplyr and tidyr?

How can I select columns and filter rows?

How can I use commands together

How to export data?

Objectives

Describe the purpose of the dplyr and tidyr packages.

Select certain columns in a data frame with the dplyr function select.

Extract certain rows in a data frame according to logical (boolean) conditions with the dplyr function filter .

Link the output of one dplyr function to the input of another function with the ‘pipe’ operator %>%.

Add new columns to a data frame that are functions of existing columns with mutate.

Use the split-apply-combine concept for data analysis.

Use summarize, group_by, and count to split a data frame into groups of observations, apply summary statistics for each group, and then combine the results.

Describe the concept of a wide and a long table format and for which purpose those formats are useful.

Describe what key-value pairs are.

Reshape a data frame from long to wide format and back with the pivot_wider and pivot_longer commands from the tidyr package.

Export a data frame to a .csv file.

Data manipulation using dplyr and tidyr

Bracket subsetting is handy, but it can be cumbersome and difficult to read, especially for complicated operations. Enter dplyr. dplyr is a package for helping with tabular data manipulation. It pairs nicely with tidyr which enables you to swiftly convert between different data formats for plotting and analysis.

The tidyverse package is an “umbrella-package” that installs tidyr, dplyr, and several other useful packages for data analysis, such as ggplot2, tibble, etc.

The tidyverse package tries to address 3 common issues that arise when doing data analysis in R:

- The results from a base R function sometimes depend on the type of data.

- R expressions are used in a non standard way, which can be confusing for new learners.

- The existence of hidden arguments having default operations that new learners are not aware of.

You should already have installed and loaded the tidyverse package. If you haven’t already done so, you can type install.packages("tidyverse") straight into the console. Then, type library(tidyverse) to load the package.

library(tidyverse)

What are dplyr and tidyr?

The package dplyr provides helper tools for the most common data manipulation tasks. It is built to work directly with data frames, with many common tasks optimized by being written in a compiled language (C++). An additional feature is the ability to work directly with data stored in an external database. The benefits of doing this are that the data can be managed natively in a relational database, queries can be conducted on that database, and only the results of the query are returned.

This addresses a common problem with R in that all operations are conducted in-memory and thus the amount of data you can work with is limited by available memory. The database connections essentially remove that limitation in that you can connect to a database of many hundreds of GB, conduct queries on it directly, and pull back into R only what you need for analysis.

The package tidyr addresses the common problem of wanting to reshape your data for plotting and usage by different R functions. For example, sometimes we want data sets where we have one row per measurement. Other times we want a data frame where each measurement type has its own column, and rows are instead more aggregated groups (e.g., a time period, an experimental unit like a plot or a batch number). Moving back and forth between these formats is non-trivial, and tidyr gives you tools for this and more sophisticated data manipulation.

To learn more about dplyr and tidyr after the workshop, you may want to check out this handy data transformation with dplyr cheatsheet and this one about tidyr.

As before, we’ll load in our data package using the library() and data() functions.

library(palmerpenguins)

data(penguins)

## inspect the data

str(penguins)

## preview the data

View(penguins)

Next, we’re going to learn some of the most common dplyr functions:

select(): subset columnsfilter(): subset rows on conditionsmutate(): create new columns by using information from other columnsgroup_by()andsummarize(): create summary statistics on grouped dataarrange(): sort resultscount(): count discrete values

Selecting columns and filtering rows

To select columns of a data frame, use select(). The first argument to this function is the data frame (penguins), and the subsequent arguments are the columns to keep.

select(penguins, island, species, body_mass_g)

To select all columns except certain ones, put a “-” in front of the variable to exclude it.

select(penguins, -year, -species)

This will select all the variables in penguins except year and species.

To choose rows based on a specific criterion, use filter():

filter(penguins, year == 2007)

Pipes

What if you want to select and filter at the same time? There are three ways to do this: use intermediate steps, nested functions, or pipes.

With intermediate steps, you create a temporary data frame and use that as input to the next function, like this:

penguins2 <- filter(penguins, body_mass_g < 3000)

penguins_sml <- select(penguins2, species, sex, body_mass_g)

This is readable, but can clutter up your workspace with lots of objects that you have to name individually. With multiple steps, that can be hard to keep track of.

You can also nest functions (i.e. one function inside of another), like this:

penguins_sml <- select(filter(penguins, body_mass_g < 3000), species, sex, body_mass_g)

This is handy, but can be difficult to read if too many functions are nested, as R evaluates the expression from the inside out (in this case, filtering, then selecting).

The last option, pipes, are a recent addition to R. Pipes let you take the output of one function and send it directly to the next, which is useful when you need to do many things to the same dataset. Pipes in R look like %>% and are made available via the magrittr package, installed automatically with dplyr. If you use RStudio, you can type the pipe with Ctrl + Shift + M if you have a PC or Cmd + Shift + M if you have a Mac.

penguins %>%

filter(body_mass_g < 3000) %>%

select(species, sex, body_mass_g)

In the above code, we use the pipe to send the penguins dataset first through filter() to keep rows where body_mass_g is less than 5, then through select() to keep only the species, sex, and body_mass_g columns. Since %>% takes the object on its left and passes it as the first argument to the function on its right, we don’t need to explicitly include the data frame as an argument to the filter() and select() functions any more.

Some may find it helpful to read the pipe like the word “then.” For instance, in the example above, we took the data frame penguins, then we filtered for rows with body_mass_g < 5, then we selected columns species, sex, and body_mass_g. The dplyr functions by themselves are somewhat simple, but by combining them into linear workflows with the pipe we can accomplish more complex manipulations of data frames.

If we want to create a new object with this smaller version of the data, we can assign it a new name:

penguins_sml <- penguins %>%

filter(body_mass_g < 3000) %>%

select(species, sex, body_mass_g)

penguins_sml

Note that the final data frame is the leftmost part of this expression.

Mutate

Frequently you’ll want to create new columns based on the values in existing columns, for example to do unit conversions, or to find the ratio of values in two columns. For this we’ll use mutate().

To create a new column that reads body mass in in kilogram:

penguins %>%

mutate(body_mass_kg = body_mass_g / 1000)

You can also create a second new column based on the first new column within the same call of mutate():

penguins %>%

mutate(body_mass_kg = body_mass_g / 1000,

body_mass_lb = body_mass_kg * 2.2)

If this runs off your screen and you just want to see the first few rows, you can use a pipe to view the head() of the data. (Pipes work with non-dplyr functions, too, as long as the dplyr or magrittr package is loaded).

penguins %>%

mutate(body_mass_kg = body_mass_g / 1000,

body_mass_lb = body_mass_kg * 2.2) %>%

head()

In the first few rows of the output we see some NA’s, so if we wanted to remove those we could insert a filter() in the chain:

penguins %>%

filter(!is.na(body_mass_g)) %>%

mutate(body_mass_kg = body_mass_g / 1000,

body_mass_lb = body_mass_kg * 2.2) %>%

head()

is.na() is a function that determines whether something is an NA. The ! symbol negates the result, so we’re asking for every row where weight is not an NA.

Challenge

Create a new data frame from the

penguinsdata that meets the following criteria: contains only thespeciescolumn and a new column calledflipper_length_cmcontaining the length of the penguin flipper values (currently in mm) converted to centimeters. In thisflipper_length_cmcolumn, there are no NAs and all values are less than 20. Hint: think about how the commands should be ordered to produce this data frame!Solution

penguins_flipper_length_cm <- penguins %>% filter(!is.na(penguins_flipper_length_mm)) %>% mutate(flipper_length_cm = penguins_flipper_length_mm / 10) %>% filter(flipper_length_cm < 20) %>% select(species, flipper_length_cm)

Split-apply-combine data analysis and the summarize() function

Many data analysis tasks can be approached using the split-apply-combine paradigm: split the data into groups, apply some analysis to each group, and then combine the results. Key functions of dplyr for this workflow are group_by() and summarize().

The group_by() and summarize() functions

group_by() is often used together with summarize(), which collapses each group into a single-row summary of that group. group_by() takes as arguments the column names that contain the categorical variables for which you want to calculate the summary statistics. So to compute the mean body_mass_g by sex:

penguins %>%

group_by(sex) %>%

summarize(mean_body_mass_g = mean(body_mass_g))

You can also group by multiple columns:

penguins %>%

group_by(sex, species) %>%

summarize(mean_body_mass_g = mean(body_mass_g))

From both outputs, we see missing data. The value NA appears an additional, extraneous row.

# where are the NA's coming from?

penguins %>%

filter(is.na(sex))

is.na() is a function that determines whether something is an NA. The ! symbol negates the result, so we’re asking for every row where sex is not an NA. We can pipe this filter so that we calculate the mean body mass after missing values have been removed.

# filter out observations with missing body mass measurements and unidentified sex

penguins %>%

filter(!is.na(body_mass_g), !is.na(sex)) %>%

group_by(sex, species) %>%

summarize(mean_body_mass_g = mean(body_mass_g))

penguins %>%

filter(!is.na(body_mass_g), !is.na(sex)) %>%

group_by(sex, species) %>%

summarize(mean_body_mass_g = mean(body_mass_g),

min_body_mass_g = min(body_mass_g))

When calculating summary values, we can also look at the amount of cases that went into the calculation. The n() function returns the number of observations in each group.

penguins %>%

filter(!is.na(body_mass_g), !is.na(sex)) %>%

group_by(sex, species) %>%

summarize(mean_body_mass_g = mean(body_mass_g),

min_body_mass_g = min(body_mass_g),

num_cases = n())

To keep only rows where no attribute values are missing, we can pipe drop_na() to the data so that the following piped commands are implemented on complete records.

# drop all rows with any NA's

penguins %>%

drop_na() %>%

group_by(sex, species) %>%

summarize(mean_body_mass_g = mean(body_mass_g),

min_body_mass_g = min(body_mass_g))

It is sometimes useful to rearrange the result of a query to inspect the values. For instance, we can sort on min_body_mass_g to put the lighter species first:

penguins %>%

drop_na() %>%

group_by(sex, species) %>%

summarize(mean_body_mass_g = mean(body_mass_g),

min_body_mass_g = min(body_mass_g)) %>%

arrange(min_body_mass_g)

Counting

When working with data, we often want to know the number of observations found for each factor or combination of factors. For this task, dplyr provides count(). For example, if we wanted to count the number of rows of data for each sex, we would do:

penguins %>%

count(sex)

The count() function is shorthand for something we’ve already seen: grouping by a variable, and summarizing it by counting the number of observations in that group. In other words, penguins %>% count() is equivalent to:

penguins %>%

group_by(sex) %>%

summarise(count = n())

For convenience, count() provides the sort argument:

penguins %>%

count(sex, sort = TRUE)

Previous example shows the use of count() to count the number of rows/observations for one factor (i.e., sex). If we wanted to count combination of factors, such as sex and species, we would specify the first and the second factor as the arguments of count():

penguins %>%

count(sex, species)

With the above code, we can proceed with arrange() to sort the table according to a number of criteria so that we have a better comparison. For instance, we might want to arrange the table above in (i) an alphabetical order of the levels of the species and (ii) in descending order of the count:

penguins %>%

count(sex, species) %>%

arrange(species, desc(n))

From the table above, we may learn that, for instance, there are 6 observations of the Adelie species and 5 observations of the Gentoo species that are not specified for its sex (i.e. NA).

Challenge

How many penguins are in each island surveyed?

Use group_by() and summarize() to find the mean, min, and max bill length for each species (using

species). Also add the number of observations (hint: usen()).What was the heaviest animal measured in each year? Return the columns year, island, species, and body_mass_g.

Solution

1.

penguins %>% count(island)2.

penguins %>% filter(!is.na(bill_length_mm)) %>% group_by(species) %>% summarize( mean_bill_length_mm = mean(bill_length_mm), min_bill_length_mm = min(bill_length_mm), max_bill_length_mm = max(bill_length_mm), num_cases = n() )3.

penguins %>% filter(!is.na(body_mass_g)) %>% group_by(year) %>% filter(body_mass_g == max(body_mass_g)) %>% select(year, island, species, body_mass_g) %>% arrange(year)

Reshaping with pivot_longer and pivot_wider

In the spreadsheet lesson, we discussed how to structure our data leading to the four rules defining a tidy dataset:

- Each variable has its own column

- Each observation has its own row

- Each value must have its own cell

- Each type of observational unit forms a table Here we examine the fourth rule: Each type of observational unit forms a table.

In penguins, the rows of penguins contain the values of variables associated with each record (the unit), values such as the body_mass_g or sex of each animal associated with each record. What if instead of comparing records, we wanted to compare the different mean body_mass_g of each sex between islands? (Ignoring island for simplicity).

We’d need to create a new table where each row (the unit) is comprised of values of variables associated with each island. In practical terms this means the values in sex would become the names of column variables and the cells would contain the values of the mean body_mass_g observed on each island.

Having created a new table, it is therefore straightforward to explore the relationship between the body_mass_g of different penguins within, and between, the islands. The key point here is that we are still following a tidy data structure, but we have reshaped the data according to the observations of interest: average sex body_mass_g per island instead of recordings per date.

The opposite transformation would be to transform column names into values of a variable.

We can do both these of transformations with two tidyr functions, pivot_wider() and pivot_longer().

These may sound like dramatically different data layouts, but there are some tools that make transitions between these layouts more straightforward than you might think! The gif below shows how these two formats relate to each other, and gives you an idea of how we can use R to shift from one format to the other.

Pivoting from long to wide format

pivot_wider() takes three principal arguments:

- the data

- the names_from column variable whose values will become new column names.

- the values_from column variable whose values will fill the new column variables.

Further arguments include

values_fillwhich, if set, fills in missing values with the value provided.

Let’s use pivot_wider() to transform penguins to find the mean body_mass_g of each sex in each island over the entire survey period. We use filter(), group_by() and summarise() to filter our observations and variables of interest, and create a new variable for the mean_body_mass_g.

penguins_gw <- penguins %>%

filter(!is.na(body_mass_g)) %>%

group_by(island, sex) %>%

summarize(mean_body_mass_g = mean(body_mass_g))

str(penguins_gw)

This yields penguins_gw where the observations for each island are distributed across multiple rows, 9 observations of 3 variables (island, sex, and mean_body_mass_g). Using pivot_wider() with the names from sex and with values from mean_body_mass_g this becomes 3 observations of 2 variables, one row for each island and one column for each sex).

penguins_wide <- penguins_gw %>%

pivot_wider(names_from = sex, values_from = mean_body_mass_g)

str(penguins_wide)

penguins_wide

We could now compare between the mean_body_mass_g values between penguin sex and different islands. Note that since we did not filter NA values from sex, we have a third column. We can use the rename() function to provide this column a more descriptive name.

# fix that funny name

penguins_wide <- penguins_gw %>%

pivot_wider(names_from = sex,

values_from = mean_body_mass_g) %>%

rename(unknown = `NA`) # "unknown" is the new column name

penguins_wide

Pivoting from wide to long format

The opposing situation could occur if we had been provided with data in the form of penguins_wide, where the sex names are column names, but we wish to treat them as values of a sex variable instead.

In this situation we are reshaping the column names and turning them into a pair of new variables. One variable represents the column names as values, and the other variable contains the values previously associated with the column names.

pivot_longer() takes four principal arguments:

- the data

- the names_to column variable we wish to create from column names.

- the values_to column variable we wish to create and fill with values.

- cols are the name of the columns we use to make this pivot (or to drop).

To recreate penguins_gw from penguins_wide we would create a names variable called sex and value variable called mean_body_mass_g.

In pivoting longer, we also need to specify what columns to reshape. If the columns are directly adjacent as they are here, we don’t even need to list the all out: we can just use the : operator!

penguins_long <- penguins_wide %>%

pivot_longer(names_to = "sex",

values_to = "mean_body_mass_g",

cols = female:unknown) #includes columns "female", "male", and "unknown"

penguins_long

Pivoting wider and then longer can be a useful way to balance out a dataset so that every replicate has the same composition.

We could also have used a specification for what columns to exclude. In this next example, we will use all columns except island for the names variable. By using the minus sign in the cols argument, we omit island from being reshaped

penguins_wide %>%

pivot_longer(names_to = "sex", values_to = "mean_body_mass_g", cols = -island) %>%

head()

Challenge

Reshape the

penguinsdata frame withyearas columns,islandas rows, and the number ofspeciesperislandas the values. You will need to summarize before reshaping, and use the functionn_distinct()to get the number of unique species within a particular chunk of data. It’s a powerful function! See?n_distinctfor more.Now take that data frame and

pivot_longer()it, so each row is a uniqueislandby year combination.The penguins data set has two measurement columns:

bill_length_mmandbody_mass_g. This makes it difficult to do things like look at the relationship between mean values of each measurement per year in differentislandtypes. Let’s walk through a common solution for this type of problem. First, usepivot_longer()to create a dataset where we have a names column called measurement and a value column that takes on the value of eitherbill_length_mmorbody_mass_g. Hint: You’ll need to specify which columns will be part of the reshape.With this new data set, calculate the average of each measurement in each year for each different island. Then

pivot_wider()them into a data set with a column forbill_length_mmandbody_mass_g. Hint: You only need to specify the names and values columns forpivot_wider().Solution

1.

penguins_wide_species <- penguins %>% group_by(island, year) %>% summarize(n_species = n_distinct(species)) %>% pivot_wider(names_from = year, values_from = n_species) head(penguins_wide_species)2.

penguins_wide_species %>% pivot_longer(names_to = "year", values_to = "n_species", cols = -island)3.

penguins_long <- penguins %>% pivot_longer(names_to = "measurement", values_to = "value", cols = c(bill_length_mm, body_mass_g))4.

penguins_long %>% group_by(year, measurement, island) %>% summarize(mean_value = mean(value, na.rm=TRUE)) %>% pivot_wider(names_from = measurement, values_from = mean_value)

Exporting data

Now that you have learned how to use dplyr to extract information from or summarize your raw data, you may want to export these new data sets to share them with your collaborators or for archival.

Similar to the read_csv() function used for reading CSV files into R, there is a write_csv() function that generates CSV files from data frames.

Before using write_csv(), we are going to create a new folder, data, in our working directory that will store this generated dataset. We don’t want to write generated datasets in the same directory as our raw data. It’s good practice to keep them separate. The data_raw folder should only contain the raw, unaltered data, and should be left alone to make sure we don’t delete or modify it. In contrast, our script will generate the contents of the data directory, so even if the files it contains are deleted, we can always re-generate them.

In preparation for our next lesson on plotting, we are going to prepare a cleaned up version of the data set that doesn’t include any missing data.

Let’s start by removing observations of penguins for which there is an attribute that is missing or has not been determined:

# only use complete records

penguins_complete <- penguins %>%

drop_na()

penguins_raw_complete <- penguins_raw %>%

drop_na()

To make sure that everyone has the same data set, check that penguins_complete and penguins_raw_complete has rows and columns by using dim().

Now that our data set is ready, we can save it as a CSV file in our data folder. We should create a data folder in our RStudio Files panel before exporting these.

write_csv(penguins_complete, file = "data/penguins_complete.csv")

write_csv(penguins_raw_complete, file = "data/penguins_raw_complete.csv")

In our data folder, we now have two csv’s. A csv can be read into R as a dataframe using the read_csv() function.

We can also use glimpse() to see all data columns.

pcom <- read_csv("penguins_complete.csv")

glimpse(pcom)

glimpse(penguins_complete)

Key Points

Use the dplyr package to manipulate dataframes.

Use select() to choose variables from a dataframe.

Use filter() to choose data based on values.

Use group_by() and summarize() to work with subsets of data.

Use mutate() to create new variables.

Use the tidyr package to change the layout of dataframes.

Use pivot_wider() to go from long to wide format.

Use pivot_longer() to go from wide to long format.

Data Visualisation with ggplot2

Overview

Teaching: 80 min

Exercises: 35 minQuestions

What are the components of a ggplot?

How do I create scatterplots, boxplots, histograms, and more?

How can I change the aesthetics (ex. colour, transparency) of my plot?

How can I create multiple plots at once?

Objectives

Produce scatter plots, boxplots, and time series plots using ggplot.

Set universal plot settings.

Describe what faceting is and apply faceting in ggplot.

Modify the aesthetics of an existing ggplot plot (including axis labels and color).

Build complex and customized plots from data in a data frame.

We start by loading the required packages. ggplot2 is included in the tidyverse package.

library(tidyverse)

library(palmerpenguins)

If not still in the workspace, load the data we saved in the previous lesson.

data(penguins)

# alternatively, we can load our csv's

penguins_comp <- read_csv("data/penguins_complete.csv")

penguins_raw_comp <- read_csv("data/penguins_raw_complete.csv")

Plotting with ggplot2

ggplot2 is a plotting package that provides helpful commands to create complex plots from data in a data frame. It provides a more programmatic interface for specifying what variables to plot, how they are displayed, and general visual properties. Therefore, we only need minimal changes if the underlying data change or if we decide to change from a bar plot to a scatterplot. This helps in creating publication quality plots with minimal amounts of adjustments and tweaking.

ggplot2 refers to the name of the package itself. When using the package we use the function ggplot() to generate the plots, and so references to using the function will be referred to as ggplot() and the package as a whole as ggplot2

ggplot2 plots work best with data in the ‘long’ format, i.e., a column for every variable, and a row for every observation. Well-structured data will save you lots of time when making figures with ggplot2

ggplot graphics are built layer by layer by adding new elements. Adding layers in this fashion allows for extensive flexibility and customization of plots.