Before we start

Last updated on 2024-02-21

Overview

Questions

- What is Python and why should I learn it?

Objectives

- Present motivations for using Python.

- Organize files and directories for a set of analyses as a Python project, and understand the purpose of the working directory.

- Work with Jupyter Notebook and Spyder.

- Know where to find help.

- Demonstrate how to provide sufficient information for troubleshooting with the Python user community.

What is Python?

Python is a general-purpose programming language that supports rapid development of data analytics applications. The word “Python” is used to refer to both the programming language and the tool that executes the scripts written in the Python language.

Its main advantages are:

- Free

- Open-source

- Available on all major platforms (macOS, Linux, Windows)

- Supported by the Python Software Foundation

- Supports multiple programming paradigms

- Has a large community

- Rich ecosystem of third-party packages

So, why do you need Python for data analysis?

Easy to learn: Python is easier to learn than many other programming languages. This is important because lower barriers make it easier for new members of the community to get up to speed.


Reproducibility: Reproducibility is the ability to obtain the same results using the same dataset(s) and analysis.

Data analysis written as a Python script can be reproduced on any platform where Python is installed. Moreover, if you collect more data or correct existing data, you can quickly re-run your analysis!

An increasing number of journals and funding agencies expect analyses to be reproducible, so knowing Python will give you an edge with these requirements.


Versatility: Python is a versatile language that integrates with many existing applications. For example, one can use Python to generate manuscripts, so that if you need to update your data, change your analysis procedure, or change something else, you can quickly regenerate all the figures and your manuscript will be updated automatically.

Python can read text files, connect to databases, and work with many other data formats, on your computer or on the web.

Interdisciplinary and extensible: Python provides a framework that allows anyone to combine approaches from different research disciplines (and beyond) to best suit your analysis needs.

Python has a large and welcoming community: Thousands of people use Python daily. Many of them are willing to help you through mailing lists and websites, such as Stack Overflow and the Anaconda community portal.

Free and Open-Source Software (FOSS)… and Cross-Platform: We know we have already said that, but it is worth repeating.

Knowing your way around Anaconda

The Anaconda distribution of Python includes many popular packages and tools, such as the IPython console, Jupyter Notebook, and the Spyder IDE. Have a quick look around the Anaconda Navigator. You can launch programs from the Navigator or use the command line.

The Jupyter Notebook is an open-source web application that allows you to create and share documents that combine code, graphs, and narrative text. Spyder is an Integrated Development Environment that allows one to write Python scripts and interact with the Python software from within a single interface.

Anaconda comes with a package manager called conda, which is used to install and update additional packages.

Research Project: Best Practices

It is a good idea to keep a set of related data, analyses, and text in a single folder. All scripts and text files within this folder can then use relative paths to the data files. Working this way makes it a lot easier to move around your project and share it with others.

Organizing your working directory

Using a consistent folder structure across your projects will help you keep things organized, and will also make it easy to find/file things in the future. This can be especially helpful when you have multiple projects. In general, you may wish to create separate directories for your scripts, data, and documents.

- data/: Use this folder to store your raw data. For the sake of transparency and provenance, you should always keep a copy of your raw data. If you need to clean up data, do it programmatically (i.e. with scripts) and make sure to keep the cleaned-up data separate from the raw data. For example, you can store raw data in ./data/raw/ and clean data in ./data/clean/.

- documents/: Use this folder to store outlines, drafts, and other text.

- code/: Use this folder to store the (Python) scripts for data cleaning, analysis, and plotting that you use in this particular project.

You may need to create additional directories depending on your project needs, but these should form the backbone of your project’s directory. For this workshop, we will need a data/ folder to store our raw data, and we will later create a data_output/ folder when we learn how to export data as CSV files.
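If you prefer to create this folder structure from Python rather than by hand, the standard-library pathlib module can do it. This is a minimal sketch, assuming you run it from inside your project folder (the current working directory is used as the project root); the folder names follow the layout described above:

```python
from pathlib import Path

# Project root: assumed to be the current working directory
project_root = Path(".")

# Folders described above, with separate raw and clean data folders
folders = ["data/raw", "data/clean", "data_output", "documents", "code"]

for folder in folders:
    # parents=True also creates intermediate folders (e.g. data/);
    # exist_ok=True means re-running the script does not raise an error
    (project_root / folder).mkdir(parents=True, exist_ok=True)
```

Because exist_ok=True is set, the script is safe to run again at the start of every project.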

What is Programming and Coding?

Programming is the process of writing “programs” that a computer can execute and produce some (useful) output. Programming is a multi-step process that involves the following steps:

- Identifying the aspects of the real-world problem that can be solved computationally

- Identifying the (best) computational solution

- Implementing the solution in a specific computer language

- Testing, validating, and adjusting the implemented solution.

While “Programming” refers to all of the above steps, “Coding” refers to the third step only: “Implementing the solution in a specific computer language”. It’s important to note that “the best” computational solution must consider factors beyond the computer. Who will use the program, what resources and funds your team has for the project, and the available timeline all shape what “best” may be.

If you are working with Jupyter Notebook:

You can type Python code into a code cell and then execute the code by pressing Shift+Return. Output will be printed directly under the input cell. You can recognise a code cell by the In[ ]: at the beginning of the cell and output by Out[ ]:. Pressing the + button in the menu bar will add a new cell. All your commands as well as any output will be saved with the notebook.

If you are working with Spyder:

You can either use the console or use script files (plain text files that contain your code). The console pane (in Spyder, the bottom right panel) is the place where commands written in the Python language can be typed and executed immediately by the computer. It is also where the results will be shown. You can execute commands directly in the console by pressing Return, but they will be “lost” when you close the session. Spyder uses the IPython console by default.

Since we want our code and workflow to be reproducible, it is better to type the commands in the script editor, and save them as a script. This way, there is a complete record of what we did, and anyone (including our future selves!) has an easier time reproducing the results on their computer.

Spyder allows you to execute commands directly from the script editor by using the run buttons on top. To run the entire script, click Run file or press F5; to run the current line, click Run selection or current line or press F9. Other run buttons let you run script cells or enter debug mode. When using F9, the command on the current line in the script (indicated by the cursor) or all of the commands in the currently selected text will be sent to the console and executed.

At some point in your analysis you may want to check the content of a variable or the structure of an object, without necessarily keeping a record of it in your script. You can type these commands and execute them directly in the console. Spyder provides the Ctrl+Shift+E and Ctrl+Shift+I shortcuts to allow you to jump between the script and the console panes.

If Python is ready to accept commands, the IPython console shows an In [..]: prompt with the current console line number in []. If it receives a command (whether typed, copy-pasted or sent from the script editor), Python will execute it, display the results in the Out [..]: cell, and come back with a new In [..]: prompt waiting for new commands.

If Python is still waiting for you to enter more data because the command isn’t complete yet, the console will show a ...: prompt. It means that you haven’t finished entering a complete command. This can be because you have not typed a closing ), ] or }, or a closing quotation mark. When this happens and you thought you had finished typing your command, click inside the console window and press Esc; this will cancel the incomplete command and return you to the In [..]: prompt.

How to learn more after the workshop?

The material we cover during this workshop will give you an initial taste of how you can use Python to analyze data for your own research. However, you will need to learn more to do advanced operations such as cleaning your dataset, using statistical methods, or creating beautiful graphics. The best way to become proficient and efficient at Python, as with any other tool, is to use it to address your actual research questions. As a beginner, it can feel daunting to have to write a script from scratch, and given that many people make their code available online, modifying existing code to suit your purpose might make it easier for you to get started.

Seeking help

- check under the Help menu

- type help()

- type ?object or help(object) to get information about an object

- Python documentation

- Pandas documentation
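For example, help() works on any object, including built-in functions. A minimal sketch using the built-in len function; in Jupyter or IPython, typing len? shows similar information:

```python
# Print the documentation for the built-in len function
help(len)

# The same documentation text is stored in the function's __doc__ attribute
print(len.__doc__)
```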

Finally, a generic Google or internet search “Python task” will often either send you to the appropriate module documentation or a helpful forum where someone else has already asked your question.

I am stuck… I get an error message that I don’t understand. Start by googling the error message. However, this doesn’t always work very well, because package developers often rely on the error catching provided by Python. You end up with general error messages that might not be very helpful to diagnose a problem (e.g. “list index out of range”). If the message is very generic, you might also include the name of the function or package you’re using in your query.
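When searching, it helps to copy the exact error type and message. The sketch below triggers a generic IndexError on purpose (the list and the index 5 are arbitrary) and prints the type and message, which are the parts worth pasting into a search engine:

```python
numbers = [1, 2, 3]

try:
    numbers[5]  # there is no element at index 5
except IndexError as error:
    # type(error).__name__ is the error type; str(error) is the message
    search_text = f"{type(error).__name__}: {error}"
    print(search_text)
```

This prints IndexError: list index out of range, a message generic enough that adding the name of the function or package you were using will usually give better search results.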

You should also check Stack Overflow. Search using the [python] tag. Most questions have already been answered, but the challenge is to use the right words in the search to find the answers: https://stackoverflow.com/questions/tagged/python?tab=Votes

Asking for help

The key to receiving help from someone is for them to rapidly grasp your problem. You should make it as easy as possible to pinpoint where the issue might be.

Try to use the correct words to describe your problem. For instance, a package is not the same thing as a library. Most people will understand what you meant, but others have really strong feelings about the difference in meaning. The key point is that it can make things confusing for people trying to help you. Be as precise as possible when describing your problem.

If possible, try to reduce what doesn’t work to a simple reproducible example. If you can reproduce the problem using a very small data frame instead of your 50,000 rows and 10,000 columns one, provide the small one with the description of your problem. When appropriate, try to generalize what you are doing so even people who are not in your field can understand the question. For instance, instead of using a subset of your real dataset, create a small (3 columns, 5 rows) generic one.
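With Pandas (introduced in a later episode), such a small generic example can be built directly in your code, so that anyone can run it without your data file. A minimal sketch; the column names and values are made up for illustration:

```python
import pandas as pd

# A small, generic DataFrame: 5 rows and 3 columns of made-up values
example_df = pd.DataFrame({
    "group": ["a", "a", "b", "b", "c"],
    "value": [1.0, 2.5, 3.0, None, 4.5],  # None becomes a missing value (NaN)
    "count": [10, 12, 9, 7, 11],
})

print(example_df)
```

Pasting a snippet like this, together with the code that fails on it and the full error message, gives helpers everything they need to reproduce the problem.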

Where to ask for help?

- The person sitting next to you during the workshop. Don’t hesitate to talk to your neighbor during the workshop, compare your answers, and ask for help. You might also be interested in organizing regular meetings following the workshop to keep learning from each other.

- Your friendly colleagues: if you know someone with more experience than you, they might be able and willing to help you.

- Stack Overflow: if your question hasn’t been answered before and is well crafted, chances are you will get an answer quickly. Remember to follow their guidelines on how to ask a good question.

- Python mailing lists

More resources

Key Points

- Python is an open source and platform independent programming language.

- Jupyter Notebook and the Spyder IDE are great tools to code in and interact with Python.

- With the large Python community, it is easy to find help on the internet.

Short Introduction to Programming in Python

Last updated on 2024-02-21

Overview

Questions

- How do I program in Python?

- How can I represent my data in Python?

Objectives

- Describe the advantages of using programming vs. completing repetitive tasks by hand.

- Define the following data types in Python: strings, integers, and floats.

- Perform mathematical operations in Python using basic operators.

- Define the following as it relates to Python: lists, tuples, and dictionaries.

Interpreter

Python is an interpreted language which can be used in two ways:

- “Interactively”: when you use it as an “advanced calculator”, executing one command at a time. To start Python in this mode, execute python on the command line:

OUTPUT

Python 3.5.1 (default, Oct 23 2015, 18:05:06)
[GCC 4.8.3] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>>

Chevrons >>> indicate an interactive prompt in Python, meaning that it is waiting for your input.

OUTPUT

4

OUTPUT

Hello World

- “Scripting” mode: executing a series of “commands” saved in a text file, usually with a .py extension after the name of your file:

OUTPUT

Hello World

Introduction to variables in Python

Assigning values to variables

One of the most basic things we can do in Python is assign values to variables:

PYTHON

text = "Data Carpentry"  # An example of assigning a value to a new text variable,
                         # also known as a string data type in Python
number = 42  # An example of assigning a numeric value, or an integer data type
pi_value = 3.1415  # An example of assigning a floating point value (the float data type)

Here we’ve assigned data to the variables text, number and pi_value, using the assignment operator =. To review the value of a variable, we can type the name of the variable into the interpreter and press Return:

OUTPUT

'Data Carpentry'

Everything in Python has a type. To get the type of something, we can pass it to the built-in function type:
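For example, using the three variables assigned above (redefined here so the snippet runs on its own):

```python
text = "Data Carpentry"
number = 42
pi_value = 3.1415

# type() returns the class (data type) of each value
print(type(text))
print(type(number))
print(type(pi_value))
```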

OUTPUT

<class 'str'>

OUTPUT

<class 'int'>

OUTPUT

<class 'float'>

The variable text is of type str, short for “string”. Strings hold sequences of characters, which can be letters, numbers, punctuation or more exotic forms of text (even emoji!).

We can also see the value of something using another built-in function, print:
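For example, printing two of the variables assigned above (redefined here so the snippet runs on its own):

```python
text = "Data Carpentry"
number = 42

# print() displays the value itself, without the quotes
# that the interactive prompt shows around strings
print(text)
print(number)
```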

OUTPUT

Data Carpentry

OUTPUT

42

This may seem redundant, but in a script, print is how you display output; a value that is merely evaluated is not shown:

example.py

PYTHON

# A Python script file
# Comments in Python start with #
# The next line assigns the string "Data Carpentry" to the variable "text".
text = "Data Carpentry"

# The next line has no visible effect in a script: the value is evaluated but not displayed
text

# The next line uses the print function to print out the value we assigned to "text"
print(text)

Running the script

OUTPUT

Data Carpentry

Notice that “Data Carpentry” is printed only once.

Tip: print and type are built-in functions in Python. Later in this lesson, we will introduce methods and user-defined functions. The Python documentation is excellent for reference on the differences between them.

Tip: When editing scripts like example.py, be careful not to use word processors such as MS Word, as they may introduce extra information that confuses Python. In this lesson we will be using either Jupyter notebooks or the Spyder IDE, and for your everyday work you may also choose any text editor such as Notepad++, VSCode, Vim, or Emacs.

Operators

We can perform mathematical calculations in Python using the basic operators +, -, /, *, % (the remainder, or modulo, operator), and ** for exponentiation:
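For example, each line below evaluates one expression and prints its result; the numbers are arbitrary illustrations:

```python
addition = 2 + 2      # addition
product = 6 * 7       # multiplication
power = 2 ** 16       # exponentiation with **
remainder = 13 % 5    # modulo: the remainder of 13 divided by 5

print(addition)
print(product)
print(power)
print(remainder)
```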

OUTPUT

4

OUTPUT

42

OUTPUT

65536

OUTPUT

3

We can also use comparison operators (<, >, ==, !=, <=, >=) and logical operators such as and, or, not. The data type returned by these operators is called a boolean.
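For example, one comparison followed by three logical expressions; the operands are arbitrary illustrations:

```python
is_greater = 3 > 4            # comparison
both_true = True and True     # and: True only if both sides are True
either_true = True or False   # or: True if at least one side is True
mixed_and = True and False    # and with one False side

print(is_greater)
print(both_true)
print(either_true)
print(mixed_and)
```

Each result is a bool, so type(is_greater) returns <class 'bool'>.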

OUTPUT

False

OUTPUT

True

OUTPUT

True

OUTPUT

False

Sequences: Lists and Tuples

Lists

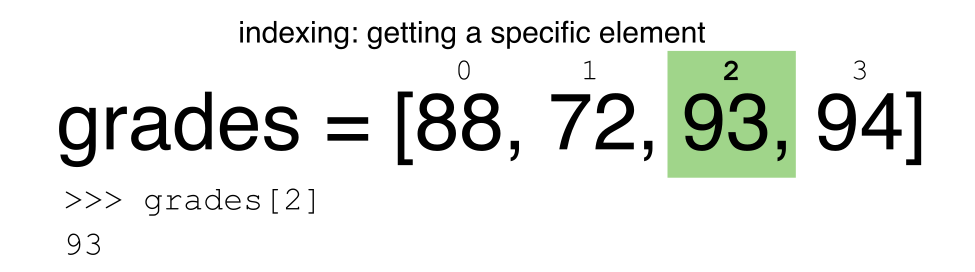

Lists are a common data structure to hold an ordered sequence of elements. Each element can be accessed by an index. Note that Python indexes start with 0 instead of 1:
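For example, with a small list (the name numbers is an illustrative choice):

```python
numbers = [1, 2, 3]

# Index 0 refers to the first element, not the second
print(numbers[0])
```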

OUTPUT

1

A for loop can be used to access the elements in a list or other Python data structure one at a time:
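For example, looping over the same small list:

```python
numbers = [1, 2, 3]

# The indented line runs once for each element in the list
for num in numbers:
    print(num)
```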

OUTPUT

1
2
3

Indentation is very important in Python. Note that the second line in the example above is indented. Just like three chevrons >>> indicate an interactive prompt in Python, the three dots ... are Python’s prompt for multiple lines. This is Python’s way of marking a block of code. [Note: you do not type >>> or ....]

To add elements to the end of a list, we can use the append method. Methods are a way to interact with an object (a list, for example). We can invoke a method using the dot . followed by the method name and a list of arguments in parentheses. Let’s look at an example using append:
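For example:

```python
numbers = [1, 2, 3]

# append() adds its argument to the end of the list, modifying the list in place
numbers.append(4)
print(numbers)
```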

OUTPUT

[1, 2, 3, 4]

To find out what methods are available for an object, we can use the built-in help command:

PYTHON

help(numbers)

OUTPUT

Help on list object:

class list(object)
 |  list() -> new empty list
 |  list(iterable) -> new list initialized from iterable's items
...

Tuples

A tuple is similar to a list in that it’s an ordered sequence of elements. However, tuples cannot be changed once created (they are “immutable”). Tuples are created by placing comma-separated values inside parentheses ().

PYTHON

# Tuples use parentheses
a_tuple = (1, 2, 3)
another_tuple = ('blue', 'green', 'red')

# Note: lists use square brackets
a_list = [1, 2, 3]

Tuples vs. Lists

- What happens when you execute a_list[1] = 5?

- What happens when you execute a_tuple[2] = 5?

- What does type(a_tuple) tell you about a_tuple?

- What information does the built-in function len() provide? Does it provide the same information on both tuples and lists? Does the help() function confirm this?

- What happens when you execute a_list[1] = 5?

The second value in a_list is replaced with 5.

- What happens when you execute a_tuple[2] = 5?

ERROR

TypeError: 'tuple' object does not support item assignment

As a tuple is immutable, it does not support item assignment. Elements in a list can be altered individually.

- What does type(a_tuple) tell you about a_tuple?

OUTPUT

<class 'tuple'>

The function tells you that the variable a_tuple is an object of the class tuple.

- What information does the built-in function len() provide? Does it provide the same information on both tuples and lists? Does the help() function confirm this?

OUTPUT

3

OUTPUT

3

len() tells us the length of an object. It works the same for both lists and tuples, providing us with the number of entries in each case.

OUTPUT

Help on built-in function len in module builtins:

len(obj, /)
    Return the number of items in a container.

Lists and tuples are both types of container, i.e. objects that can contain multiple items. The key difference is that lists are mutable, i.e. they can be modified after they have been created, while tuples are not: a tuple cannot be modified, only replaced by assigning a new tuple to the variable.
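The difference can be checked in a single script. A minimal sketch that modifies a list in place, catches the TypeError raised when assigning into a tuple, and then rebinds the tuple variable to a new tuple instead:

```python
a_list = [1, 2, 3]
a_tuple = (1, 2, 3)

# Lists are mutable: an element can be replaced in place
a_list[1] = 5
print(a_list)            # [1, 5, 3]

# Tuples are immutable: item assignment raises a TypeError
try:
    a_tuple[2] = 5
except TypeError as error:
    print("TypeError:", error)

# The variable can still be reassigned to a new tuple built from the old one
a_tuple = a_tuple[:2] + (5,)
print(a_tuple)           # (1, 2, 5)

# len() works the same way on both
print(len(a_list), len(a_tuple))  # 3 3
```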

Dictionaries

A dictionary is a container that holds pairs of objects - keys and values.

OUTPUT

'first'

Dictionaries work a lot like lists, except that you index them with keys. You can think about a key as a name or unique identifier for the value it corresponds to.
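A minimal sketch, assuming a dictionary named rev (as in the exercise below) whose keys are ordinal words and whose values are number words. Evaluated in a notebook cell or at the interactive prompt, the last line displays the value; in a script, wrap it in print():

```python
# Keys ('first', 'second') map to values ('one', 'two')
rev = {'first': 'one', 'second': 'two'}

# Look up the value stored under the key 'first'
rev['first']
```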

OUTPUT

'one'

To add an item to the dictionary, we assign a value to a new key:
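Continuing the sketch with the same assumed rev dictionary:

```python
rev = {'first': 'one', 'second': 'two'}

# Assigning to a key that does not exist yet adds a new key-value pair
rev['third'] = 'three'
rev
```

Dictionaries keep the order in which keys were inserted (guaranteed since Python 3.7), so the new pair appears last.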

OUTPUT

{'first': 'one', 'second': 'two', 'third': 'three'}

Using for loops with dictionaries is a little more complicated. We can do this in two ways:
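The first way loops over key-value pairs with the items() method. In this sketch, the !r inside the f-string prints each key with quotes (its repr); that formatting choice is ours:

```python
rev = {'first': 'one', 'second': 'two', 'third': 'three'}

# items() yields (key, value) pairs; !r shows the key with quotes
for key, value in rev.items():
    print(f"{key!r} -> {value}")
```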

OUTPUT

'first' -> one
'second' -> two
'third' -> three

or
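The second way loops over the keys only and looks up each value by its key (same assumed rev dictionary and formatting as before):

```python
rev = {'first': 'one', 'second': 'two', 'third': 'three'}

# keys() yields only the keys; each value is then looked up with rev[key]
for key in rev.keys():
    print(f"{key!r} -> {rev[key]}")
```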

OUTPUT

'first' -> one
'second' -> two
'third' -> three

Changing dictionaries

- First, print the value of the rev dictionary to the screen.

- Reassign the value that corresponds to the key second so that it no longer reads “two” but instead 2.

- Print the value of rev to the screen again to see if the value has changed.

Functions

Defining a section of code as a function in Python is done using the def keyword. For example, a function that takes two arguments and returns their sum can be defined as:
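A minimal sketch; the function name add_function and the arguments 20 and 22 are illustrative choices:

```python
def add_function(a, b):
    """Return the sum of a and b."""
    result = a + b
    return result

# Call the function and store the returned value
z = add_function(20, 22)
print(z)
```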

OUTPUT

42

Key Points

- Python is an interpreted language which can be used interactively (executing one command at a time) or in scripting mode (executing a series of commands saved in file).

- One can assign a value to a variable in Python. Those variables can be of several types, such as string, integer, floating point and complex numbers.

- Lists and tuples are similar in that they are ordered sequences of elements; they differ in that a tuple is immutable (cannot be changed).

- Dictionaries are data structures that provide mappings between keys and values.

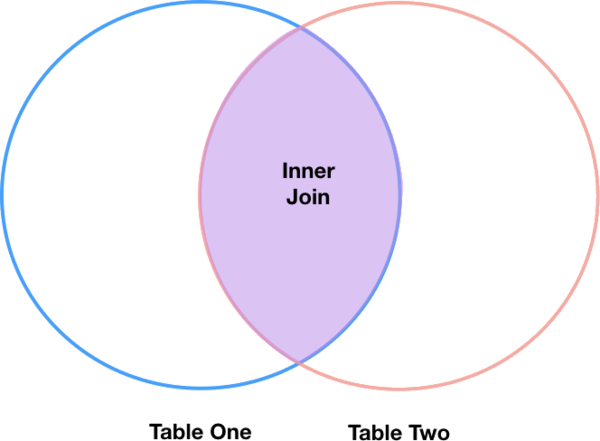

Starting With Data

Last updated on 2024-07-11

Overview

Questions

- How can I import data in Python?

- What is Pandas?

- Why should I use Pandas to work with data?

Objectives

- Navigate the workshop directory and download a dataset.

- Explain what a library is and what libraries are used for.

- Describe what the Python Data Analysis Library (Pandas) is.

- Load the Python Data Analysis Library (Pandas).

- Read tabular data into Python using Pandas.

- Describe what a DataFrame is in Python.

- Access and summarize data stored in a DataFrame.

- Define indexing as it relates to data structures.

- Perform basic mathematical operations and summary statistics on data in a Pandas DataFrame.

- Create simple plots.

Working With Pandas DataFrames in Python

We can automate the process of performing data manipulations in Python. It’s efficient to spend time building the code to perform these tasks because once it’s built, we can use it over and over on different datasets that use a similar format. This makes our data manipulation processes reproducible. We can also share our code with colleagues and they can replicate the same analysis starting with the same original data.

Starting in the same spot

To help the lesson run smoothly, let’s ensure everyone is in the same directory. This should help us avoid path and file name issues. At this time, please navigate to the workshop directory. If you are working in Jupyter Notebook, be sure that you start your notebook in the workshop directory.

Our Data

For this lesson, we will be using the Portal Teaching data, a subset of the data from Ernst et al. Long-term monitoring and experimental manipulation of a Chihuahuan Desert ecosystem near Portal, Arizona, USA.

We will be using files from the Portal Project Teaching Database. This section will use the surveys.csv file that can be downloaded here: https://ndownloader.figshare.com/files/2292172

We are studying the species and weight of animals caught in sites in our study area.

The dataset is stored as a .csv file: each row holds information for a single animal, and the columns represent:

| Column | Description |
|---|---|
| record_id | Unique id for the observation |
| month | month of observation |
| day | day of observation |
| year | year of observation |
| plot_id | ID of a particular site |
| species_id | 2-letter code |
| sex | sex of animal (“M”, “F”) |
| hindfoot_length | length of the hindfoot in mm |
| weight | weight of the animal in grams |

The first few rows of our first file look like this:

OUTPUT

record_id,month,day,year,plot_id,species_id,sex,hindfoot_length,weight
1,7,16,1977,2,NL,M,32,
2,7,16,1977,3,NL,M,33,
3,7,16,1977,2,DM,F,37,
4,7,16,1977,7,DM,M,36,
5,7,16,1977,3,DM,M,35,
6,7,16,1977,1,PF,M,14,
7,7,16,1977,2,PE,F,,
8,7,16,1977,1,DM,M,37,
9,7,16,1977,1,DM,F,34,

About Libraries

A library in Python contains a set of tools (called functions) that perform tasks on our data. Importing a library is like getting a piece of lab equipment out of a storage locker and setting it up on the bench for use in a project. Once a library is set up, it can be used or called to perform the task(s) it was built to do.

Pandas in Python

One of the best options for working with tabular data in Python is to use the Python Data Analysis Library (a.k.a. Pandas). The Pandas library provides data structures, produces high quality plots with matplotlib and integrates nicely with other libraries that use NumPy (which is another Python library) arrays.

Python doesn’t load all of the libraries available to it by default. We have to add an import statement to our code in order to use library functions. To import a library, we use the syntax import libraryName. If we want to give the library a nickname to shorten the command, we can add as nickNameHere. An example of importing the pandas library using the common nickname pd is below.
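The import statement itself is a single line:

```python
# Import the pandas library under its conventional nickname, pd
import pandas as pd
```

After this line, every Pandas function is reached through the pd. prefix, for example pd.read_csv.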

Each time we call a function that’s in a library, we use the syntax LibraryName.FunctionName. Adding the library name with a . before the function name tells Python where to find the function. In the example above, we have imported Pandas as pd. This means we don’t have to type out pandas each time we call a Pandas function.

Reading CSV Data Using Pandas

We will begin by locating and reading our survey data, which are in CSV format. CSV stands for Comma-Separated Values and is a common way to store formatted data. Other symbols may also be used, so you might see tab-separated, colon-separated or space-separated files. Pandas can work with each of these types of separators, as it allows you to specify the appropriate separator for your data. CSV files (and other delimiter-separated value file types) make it easy to share data, and can be imported and exported from many applications, including Microsoft Excel. For more details on CSV files, see the Data Organisation in Spreadsheets lesson. We can use Pandas’ read_csv function to pull the file directly into a DataFrame.
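To illustrate the separator option, the sketch below reads three of the sample rows shown earlier from a string (wrapped in io.StringIO so read_csv can treat it like a file) in which the values are separated by tabs instead of commas. The sep parameter tells read_csv which separator to expect:

```python
import io

import pandas as pd

# A few rows of survey data, separated by tab characters (\t) instead of commas
tab_separated = (
    "record_id\tmonth\tday\tyear\tplot_id\tspecies_id\tsex\thindfoot_length\tweight\n"
    "1\t7\t16\t1977\t2\tNL\tM\t32\t\n"
    "2\t7\t16\t1977\t3\tNL\tM\t33\t\n"
    "3\t7\t16\t1977\t2\tDM\tF\t37\t\n"
)

# sep="\t" tells read_csv that columns are separated by tabs
sample_df = pd.read_csv(io.StringIO(tab_separated), sep="\t")
print(sample_df)
```

The empty weight fields are read as missing values (NaN), just as in the comma-separated file.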

So What’s a DataFrame?

A DataFrame is a 2-dimensional data structure that can store data of different types (including strings, numbers, categories and more) in columns. It is similar to a spreadsheet or an SQL table or the data.frame in R. A DataFrame always has an index, which is 0-based by default. An index refers to the position of an element in the data structure.

PYTHON

# Note that pd.read_csv is used because we imported pandas as pd
pd.read_csv("data/surveys.csv")

Finding the path. Why am I getting a “FileNotFoundError” message?

After running the previous command, you may encounter a red error message that says “FileNotFoundError”, and at the very end of it says “[Errno 2] No such file or directory: ‘data/surveys.csv’”. This error occurs when an incorrect path to the file is specified.

Jupyter typically uses the location of your notebook file (.ipynb) as the working directory for reading and writing files. Let’s assume you organized your project folder as recommended in Episode 1, like the following tree shows:

python-workshop
├───code
│   └───ep2_starting_with_data.ipynb
├───data
│   └───surveys.csv
└───documents

In this scenario, when I run pd.read_csv("data/surveys.csv") in my ep2_starting_with_data.ipynb notebook, Python will look for the file at python-workshop/code/data/surveys.csv, because my working directory is where my notebook is located (i.e. inside the code folder).

To specify the correct path from my working directory, I need to add “../” to the start of my path. Therefore, the correct command would be pd.read_csv("../data/surveys.csv"). “..” is a special directory name meaning “the directory containing this one”, or more succinctly, the parent of the current directory. With the path "../data/surveys.csv", I am first going to the parent directory, which is “python-workshop” in this case, then to the “data” folder, and finally to the “surveys.csv” file.
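You can check how a path containing .. resolves with the standard-library os.path.normpath function, which collapses .. purely as text, without checking that any file exists. A minimal sketch using the folder names from the tree above:

```python
import os.path

# Starting inside python-workshop/code, ".." steps up to python-workshop
path_with_parent = os.path.join("python-workshop", "code", "..", "data", "surveys.csv")

# normpath removes the "code/.." pair, leaving the real location of the file
resolved = os.path.normpath(path_with_parent)
print(resolved)  # python-workshop/data/surveys.csv on macOS/Linux
```

On Windows the same call prints the path with backslashes instead of forward slashes.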

For future reference, if you need to know your working directory, you can use the built-in Python module “os” and its function “getcwd()”, like the following block shows:
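For example:

```python
import os

# Print the current working directory; relative paths such as
# "data/surveys.csv" are resolved starting from this folder
print(os.getcwd())
```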

Once the path is correct, the read_csv command yields the output below:

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight
0 1 7 16 1977 2 NL M 32.0 NaN
1 2 7 16 1977 3 NL M 33.0 NaN
2 3 7 16 1977 2 DM F 37.0 NaN
3 4 7 16 1977 7 DM M 36.0 NaN
4 5 7 16 1977 3 DM M 35.0 NaN
... ... ... ... ... ... ... ... ... ...
35544 35545 12 31 2002 15 AH NaN NaN NaN
35545 35546 12 31 2002 15 AH NaN NaN NaN
35546 35547 12 31 2002 10 RM F 15.0 14.0
35547 35548 12 31 2002 7 DO M 36.0 51.0
35548 35549 12 31 2002 5 NaN NaN NaN NaN

[35549 rows x 9 columns]

We can see that there were 35,549 rows parsed. Each row has 9 columns. The first, unlabelled column in the printout is the index of the DataFrame. The index is used to identify the position of the data, but it is not an actual column of the DataFrame. It looks like the read_csv function in Pandas read our file properly. However, we haven’t stored the data in a variable, so we can’t work with it yet. We need to assign the DataFrame to a variable. Remember that a variable is a name for a value, such as x, or data. We can create a new object with a variable name by assigning a value to it using =.

Let’s call the imported survey data surveys_df:

Note that Python does not produce any output on the screen when you assign the imported DataFrame to a variable. We can view the value of the surveys_df object by typing its name into the Python command prompt. Typing surveys_df prints contents like those shown above.

Note: if the output is too wide to print on your narrow terminal window, you may see something slightly different as the large set of data scrolls past. You may see simply the last column of data:

OUTPUT

17 NaN
18 NaN
19 NaN
20 NaN
21 NaN
22 NaN
23 NaN
24 NaN
25 NaN
26 NaN
27 NaN
28 NaN
29 NaN
... ...
35519 36.0
35520 48.0
35521 45.0
35522 44.0
35523 27.0
35524 26.0
35525 24.0
35526 43.0
35527 NaN
35528 25.0
35529 NaN
35530 NaN
35531 43.0
35532 48.0
35533 56.0
35534 53.0
35535 42.0
35536 46.0
35537 31.0
35538 68.0
35539 23.0
35540 31.0
35541 29.0
35542 34.0
35543 NaN
35544 NaN
35545 NaN
35546 14.0
35547 51.0
35548 NaN

[35549 rows x 9 columns]

Don’t worry: all the data is there! You can confirm this by scrolling upwards, or by looking at the [# of rows x # of columns] block at the end of the output.

You can also use surveys_df.head() to view only the first few rows of the dataset in an output that is easier to fit in one window. After doing this, you can see that pandas has neatly formatted the data to fit our screen:

PYTHON

surveys_df.head()  # The head() method displays the first rows (five by default) of a DataFrame.
                   # It is discussed below.

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length \
0 1 7 16 1977 2 NL M 32.0
1 2 7 16 1977 3 NL M 33.0
2 3 7 16 1977 2 DM F 37.0
3 4 7 16 1977 7 DM M 36.0
4 5 7 16 1977 3 DM M 35.0

weight
0 NaN
1 NaN
2 NaN
3 NaN
4 NaN

Exploring Our Species Survey Data

A first good command to explore our dataset is the “info()” DataFrame method. It will show us useful information like: the number of rows (or entries) and columns in our dataset; and the name, number of non-null values and data type of each column.

OUTPUT

<class 'pandas.core.frame.DataFrame'>
RangeIndex: 35549 entries, 0 to 35548
Data columns (total 9 columns):
 #   Column           Non-Null Count  Dtype
---  ------           --------------  -----
 0   record_id        35549 non-null  int64
 1   month            35549 non-null  int64
 2   day              35549 non-null  int64
 3   year             35549 non-null  int64
 4   plot_id          35549 non-null  int64
 5   species_id       34786 non-null  object
 6   sex              33038 non-null  object
 7   hindfoot_length  31438 non-null  float64
 8   weight           32283 non-null  float64
dtypes: float64(2), int64(5), object(2)
memory usage: 2.4+ MB

Again, we can use the type function to see what kind of thing surveys_df is:

OUTPUT

<class 'pandas.core.frame.DataFrame'>

As expected, it’s a DataFrame (or, to use the full name that Python uses to refer to it internally, a pandas.core.frame.DataFrame).

What kind of things does surveys_df contain? DataFrames have an attribute called dtypes that answers this:

OUTPUT

record_id int64

month int64

day int64

year int64

plot_id int64

species_id object

sex object

hindfoot_length float64

weight float64

dtype: object

All the values in a single column have the same type. For example, values in the month column have type int64, which is a kind of integer. Cells in the month column cannot have fractional values, but values in the weight and hindfoot_length columns can, because they have type float64. The object type doesn’t have a very helpful name, but in this case it represents strings (such as ‘M’ and ‘F’ in the case of sex).

We’ll talk a bit more about what the different formats mean in a different lesson.
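The following sketch shows type(), info() and dtypes side by side without needing the survey file. It uses a small hypothetical DataFrame: toy_df and its values are made up for illustration. With the pandas versions this lesson targets, the text column reports object. Newer pandas releases may report a dedicated string dtype instead.

```python
import numpy as np
import pandas as pd

# A small hypothetical stand-in for surveys_df (not the real survey data)
toy_df = pd.DataFrame({
    "record_id": [1, 2, 3],
    "month": [7, 7, 8],
    "sex": ["M", "F", np.nan],
    "weight": [40.0, np.nan, 35.0],
})

print(type(toy_df))   # the DataFrame class
toy_df.info()         # rows, columns, non-null counts and dtypes
print(toy_df.dtypes)  # one dtype per column
```

The integer column with no missing values stays an integer type. The weight column, which holds a missing value, is float64.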

Useful Ways to View DataFrame Objects in Python

There are many ways to summarize and access the data stored in DataFrames, using attributes and methods provided by the DataFrame object.

Attributes are features of an object. For example, the shape attribute will output the size (the number of rows and columns) of an object. To access an attribute, use the DataFrame object name followed by the attribute name: df_object.attribute. For example, using the DataFrame surveys_df and the attribute columns, an index of all the column names in the DataFrame can be accessed with surveys_df.columns.

Methods are like functions, but they only work on particular kinds of objects. As an example, the head() method works on DataFrames. Methods are called in a similar fashion to attributes, using the syntax df_object.method(). Using surveys_df.head() gets the first few rows in the DataFrame surveys_df using the head() method. With a method, we can supply extra information in the parentheses to control behaviour.

Let’s look at the data using these.

Challenge - DataFrames

Using our DataFrame surveys_df, try out the attributes & methods below to see what they return.

- surveys_df.columns

- surveys_df.shape (take note of the output of shape: what format does it return the shape of the DataFrame in?)

HINT: More on tuples, here.

- surveys_df.head() (also, what does surveys_df.head(15) do?)

- surveys_df.tail()

- surveys_df.columns provides the names of the columns in the DataFrame.

- surveys_df.shape provides the dimensions of the DataFrame as a tuple in (r,c) format, where r is the number of rows and c the number of columns.

- surveys_df.head() returns the first 5 lines of the DataFrame, annotated with column and row labels. Adding an integer as an argument to the method specifies the number of lines to display from the top of the DataFrame, e.g. surveys_df.head(15) will return the first 15 lines.

- surveys_df.tail() will display the last 5 lines, and behaves similarly to the head() method.
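You can also try the calls from the challenge on a small hypothetical DataFrame. In this sketch, toy_df is invented for illustration. Attributes are written without parentheses, and methods are written with them:

```python
import pandas as pd

# Hypothetical 6-row DataFrame standing in for surveys_df
toy_df = pd.DataFrame({
    "plot_id": [2, 3, 2, 7, 3, 1],
    "species_id": ["NL", "NL", "DM", "DM", "DM", "PF"],
    "weight": [None, None, 37.0, 36.0, 35.0, 14.0],
})

# Attributes: no parentheses
print(toy_df.shape)    # (6, 3): a tuple of (rows, columns)
print(toy_df.columns)  # Index of column names

# Methods: called with parentheses, optionally with arguments
print(toy_df.head())   # first 5 rows by default
print(toy_df.head(2))  # first 2 rows
print(toy_df.tail(1))  # last row
```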

Calculating Statistics From Data In A Pandas DataFrame

We’ve read our data into Python. Next, let’s perform some quick summary statistics to learn more about the data that we’re working with. We might want to know how many animals were collected in each site, or how many of each species were caught. We can perform summary stats quickly using groups. But first we need to figure out what we want to group by.

Let’s begin by exploring our data:

which returns:

OUTPUT

Index(['record_id', 'month', 'day', 'year', 'plot_id', 'species_id', 'sex',

'hindfoot_length', 'weight'],

dtype='object')

Let’s get a list of all the species. The pd.unique function tells us all of the unique values in the species_id column.

which returns:

OUTPUT

array(['NL', 'DM', 'PF', 'PE', 'DS', 'PP', 'SH', 'OT', 'DO', 'OX', 'SS',

'OL', 'RM', nan, 'SA', 'PM', 'AH', 'DX', 'AB', 'CB', 'CM', 'CQ',

'RF', 'PC', 'PG', 'PH', 'PU', 'CV', 'UR', 'UP', 'ZL', 'UL', 'CS',

'SC', 'BA', 'SF', 'RO', 'AS', 'SO', 'PI', 'ST', 'CU', 'SU', 'RX',

'PB', 'PL', 'PX', 'CT', 'US'], dtype=object)

Challenge - Statistics

Create a list of unique site IDs (“plot_id”) found in the surveys data. Call it site_names. How many unique sites are there in the data? How many unique species are in the data?

What is the difference between len(site_names) and surveys_df['plot_id'].nunique()?

site_names = pd.unique(surveys_df["plot_id"])

- How many unique sites are in the data? site_names.size or len(site_names) provide the answer: 24

- How many unique species are in the data? len(pd.unique(surveys_df["species_id"])) returns 49, but one of those values is NaN (records with a missing species_id), so there are 48 species.

- len(site_names) and surveys_df['plot_id'].nunique() both provide the same output: they are alternative ways of counting the unique values. The nunique method combines the count and unique value extraction, and can help avoid the creation of intermediate variables like site_names. Note that nunique() ignores NaN by default, so on a column that contains missing values, such as species_id, it returns 48 rather than 49.
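Counting with pd.unique and counting with nunique only give different results when a column contains missing values. Here is a minimal sketch on a hypothetical species column with one NaN:

```python
import numpy as np
import pandas as pd

# Hypothetical species column with one missing value
species = pd.Series(["NL", "DM", "NL", np.nan, "PF", "DM"])

unique_values = pd.unique(species)    # order of first appearance, NaN included
print(unique_values)
print(len(unique_values))             # 4: NL, DM, NaN, PF
print(species.nunique())              # 3: NaN is dropped by default
print(species.nunique(dropna=False))  # 4: NaN counted as a value
```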

Groups in Pandas

We often want to calculate summary statistics grouped by subsets or attributes within fields of our data. For example, we might want to calculate the average weight of all individuals per site.

We can calculate basic statistics for all records in a single column using the syntax below:

gives output

OUTPUT

count 32283.000000

mean 42.672428

std 36.631259

min 4.000000

25% 20.000000

50% 37.000000

75% 48.000000

max 280.000000

Name: weight, dtype: float64

We can also extract one specific metric if we wish:

PYTHON

surveys_df['weight'].min()

surveys_df['weight'].max()

surveys_df['weight'].mean()

surveys_df['weight'].std()

surveys_df['weight'].count()

But if we want to summarize by one or more variables, for example sex, we can use Pandas’ .groupby method. Once we’ve created a groupby object, we can quickly calculate summary statistics by a group of our choice.

The pandas describe method returns descriptive statistics including count, mean, std, min, the 25%, 50% (median) and 75% quartiles, and max for a particular column in the data. By default, describe only returns summary values for columns containing numeric data.

PYTHON

# Group the data by sex

grouped_data = surveys_df.groupby('sex')

# Summary statistics for all numeric columns by sex

grouped_data.describe()

# Provide the mean for each numeric column by sex

grouped_data.mean(numeric_only=True)


OUTPUT

record_id month day year plot_id \

sex

F 18036.412046 6.583047 16.007138 1990.644997 11.440854

M 17754.835601 6.392668 16.184286 1990.480401 11.098282

hindfoot_length weight

sex

F 28.836780 42.170555

M 29.709578 42.995379

The groupby command is powerful in that it allows us to quickly generate summary stats.
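The sketch below runs the single-column statistics and the grouped summaries above on a small hypothetical table, so it works without the survey data. The sex and weight values are invented:

```python
import pandas as pd

# Hypothetical records: sex and weight for six animals
toy_df = pd.DataFrame({
    "sex": ["F", "M", "F", "M", "F", "M"],
    "weight": [40.0, 44.0, 36.0, 50.0, None, 38.0],
})

# Whole-column statistics (NaN values are skipped)
print(toy_df["weight"].describe())
print(toy_df["weight"].mean(), toy_df["weight"].count())

# The same kind of statistics, one row per group
grouped = toy_df.groupby("sex")
print(grouped["weight"].describe())
means = grouped.mean(numeric_only=True)
print(means)
```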

Challenge - Summary Data

- How many recorded individuals are female F and how many male M?

- What happens when you group by two columns using the following syntax and then calculate mean values?

grouped_data2 = surveys_df.groupby(['plot_id', 'sex'])

grouped_data2.mean(numeric_only=True)

- Summarize weight values for each site in your data. HINT: you can use the following syntax to only create summary statistics for one column in your data.

by_site = surveys_df.groupby('plot_id')

by_site['weight'].describe()

- The first column of output from grouped_data.describe() (count) tells us that the data contains 15690 records for female individuals and 17348 records for male individuals.

- Note that these two numbers do not sum to 35549, the total number of rows we know to be in the surveys_df DataFrame. Why do you think some records were excluded from the grouping?

- Calling the mean() method on data grouped by these two columns calculates and returns the mean value for each combination of plot and sex.

- Note that the mean is not meaningful for some variables, e.g. day, month, and year. You can specify particular columns and particular summary statistics using the agg() method (short for aggregate), e.g. to obtain the last survey year, median foot-length and mean weight for each plot/sex combination:

PYTHON

surveys_df.groupby(['plot_id', 'sex']).agg({"year": 'max',

"hindfoot_length": 'median',

"weight": 'mean'})

- Summarizing weight values for each site:

PYTHON

surveys_df.groupby(['plot_id'])['weight'].describe()

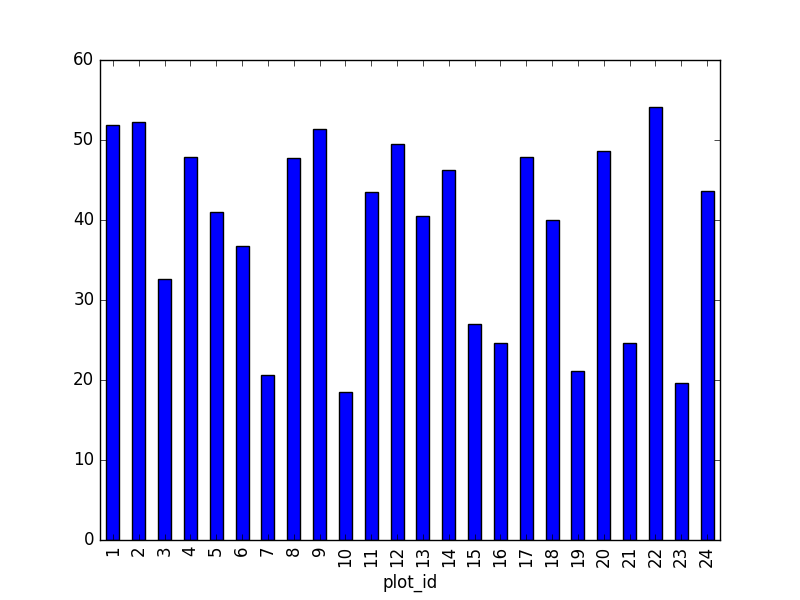

OUTPUT

count mean std min 25% 50% 75% max

plot_id

1 1903.0 51.822911 38.176670 4.0 30.0 44.0 53.0 231.0

2 2074.0 52.251688 46.503602 5.0 24.0 41.0 50.0 278.0

3 1710.0 32.654386 35.641630 4.0 14.0 23.0 36.0 250.0

4 1866.0 47.928189 32.886598 4.0 30.0 43.0 50.0 200.0

5 1092.0 40.947802 34.086616 5.0 21.0 37.0 48.0 248.0

6 1463.0 36.738893 30.648310 5.0 18.0 30.0 45.0 243.0

7 638.0 20.663009 21.315325 4.0 11.0 17.0 23.0 235.0

8 1781.0 47.758001 33.192194 5.0 26.0 44.0 51.0 178.0

9 1811.0 51.432358 33.724726 6.0 36.0 45.0 50.0 275.0

10 279.0 18.541219 20.290806 4.0 10.0 12.0 21.0 237.0

11 1793.0 43.451757 28.975514 5.0 26.0 42.0 48.0 212.0

12 2219.0 49.496169 41.630035 6.0 26.0 42.0 50.0 280.0

13 1371.0 40.445660 34.042767 5.0 20.5 33.0 45.0 241.0

14 1728.0 46.277199 27.570389 5.0 36.0 44.0 49.0 222.0

15 869.0 27.042578 35.178142 4.0 11.0 18.0 26.0 259.0

16 480.0 24.585417 17.682334 4.0 12.0 20.0 34.0 158.0

17 1893.0 47.889593 35.802399 4.0 27.0 42.0 50.0 216.0

18 1351.0 40.005922 38.480856 5.0 17.5 30.0 44.0 256.0

19 1084.0 21.105166 13.269840 4.0 11.0 19.0 27.0 139.0

20 1222.0 48.665303 50.111539 5.0 17.0 31.0 47.0 223.0

21 1029.0 24.627794 21.199819 4.0 10.0 22.0 31.0 190.0

22 1298.0 54.146379 38.743967 5.0 29.0 42.0 54.0 212.0

23 369.0 19.634146 18.382678 4.0 10.0 14.0 23.0 199.0

24 960.0 43.679167 45.936588 4.0 19.0 27.5 45.0 251.0

Quickly Creating Summary Counts in Pandas

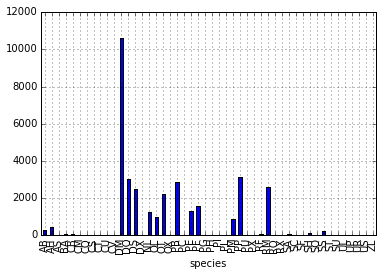

Let’s next count the number of samples for each species. We can do this in a few ways, but we’ll use groupby combined with a count() method.

PYTHON

# Count the number of samples by species

species_counts = surveys_df.groupby('species_id')['record_id'].count()

print(species_counts)

Or, we can also count just the rows that have the species “DO”:
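Here is a minimal sketch on a small hypothetical table. It reads the count for “DO” from the grouped counts by its label. It also shows the alternative asked about in the challenge that follows: calling count() on the whole grouped DataFrame and then selecting record_id gives the same Series.

```python
import pandas as pd

# Hypothetical survey rows (record_id is unique per row)
toy_df = pd.DataFrame({
    "record_id": [1, 2, 3, 4, 5, 6],
    "species_id": ["DO", "DM", "DO", "DM", "DM", "PF"],
})

# Count records per species, as in the code above
species_counts = toy_df.groupby("species_id")["record_id"].count()

# Equivalent: count every column per group, then pick record_id
species_counts_alt = toy_df.groupby("species_id").count()["record_id"]

# The count for a single species, looked up by its label
do_count = species_counts["DO"]
print(species_counts)
print(do_count)  # 2
```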

Challenge - Make a list

What’s another way to create a list of species and associated count of the records in the data? Hint: you can perform count, min, etc. functions on groupby DataFrames in the same way you can perform them on regular DataFrames.

As well as calling count() on the record_id column of the grouped DataFrame as above, an equivalent result can be obtained by extracting record_id from the result of count() called directly on the grouped DataFrame:

OUTPUT

species_id

AB 303

AH 437

AS 2

BA 46

CB 50

CM 13

CQ 16

CS 1

CT 1

CU 1

CV 1

DM 10596

DO 3027

DS 2504

DX 40

NL 1252

OL 1006

OT 2249

OX 12

PB 2891

PC 39

PE 1299

PF 1597

PG 8

PH 32

PI 9

PL 36

PM 899

PP 3123

PU 5

PX 6

RF 75

RM 2609

RO 8

RX 2

SA 75

SC 1

SF 43

SH 147

SO 43

SS 248

ST 1

SU 5

UL 4

UP 8

UR 10

US 4

ZL 2

Basic Math Functions

If we wanted to, we could apply a mathematical operation like addition or division on an entire column of our data. For example, let’s multiply all weight values by 2.

A more practical use of this might be to normalize the data according to a mean, area, or some other value calculated from our data.
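On the real data, doubling the weights would be written surveys_df['weight'] * 2. Below is a minimal sketch of that operation, plus one kind of normalization (dividing by the column mean), using a hypothetical weight Series:

```python
import pandas as pd

# Hypothetical weights with one missing value
weights = pd.Series([40.0, 20.0, None, 60.0], name="weight")

doubled = weights * 2                  # element-wise; NaN stays NaN
normalized = weights / weights.mean()  # mean skips NaN, so it is 40.0 here
print(doubled)
print(normalized)
```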

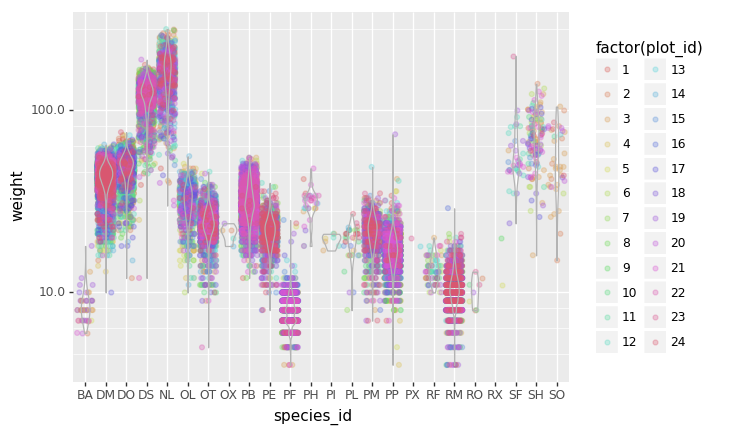

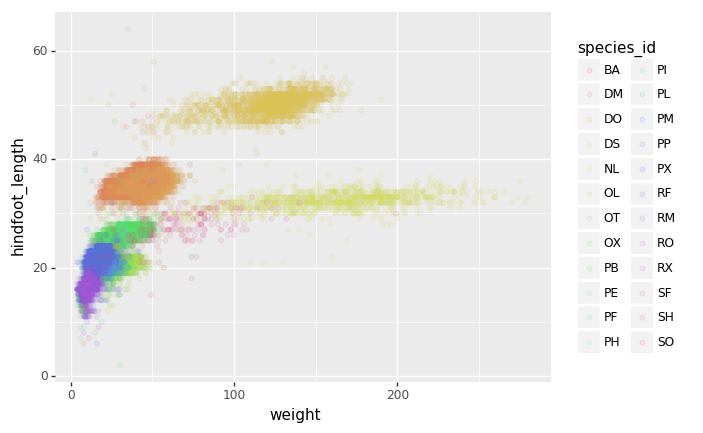

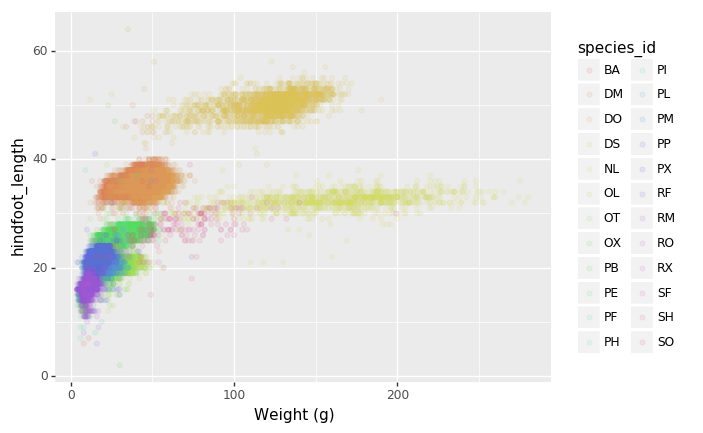

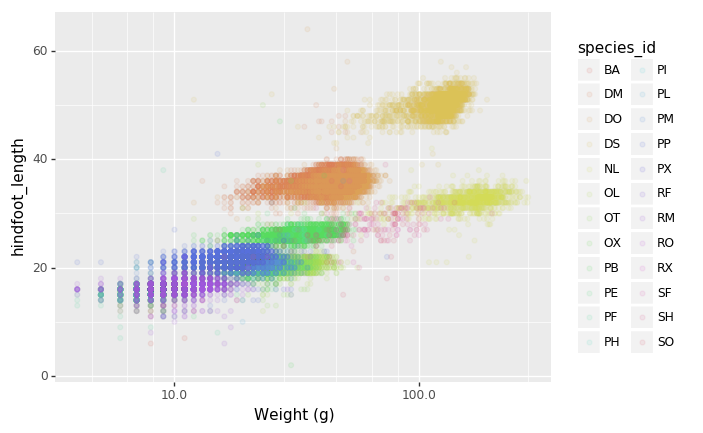

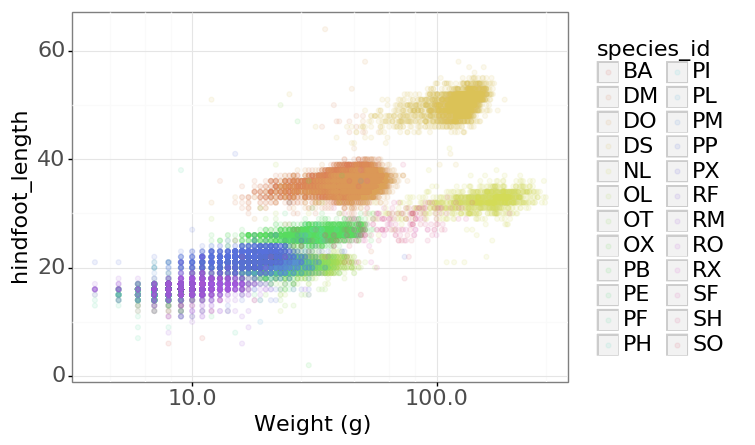

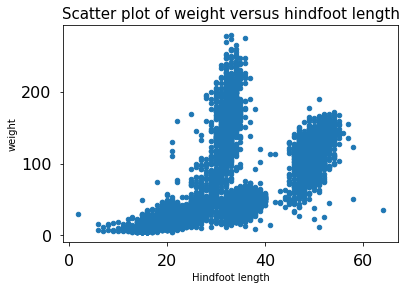

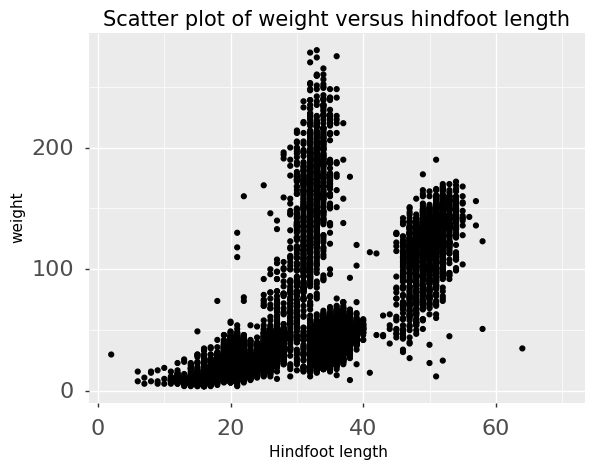

Quick & Easy Plotting Data Using Pandas

We can plot our summary stats using Pandas, too.

PYTHON

# Make sure figures appear inline in Jupyter Notebook

%matplotlib inline

# Create a quick bar chart

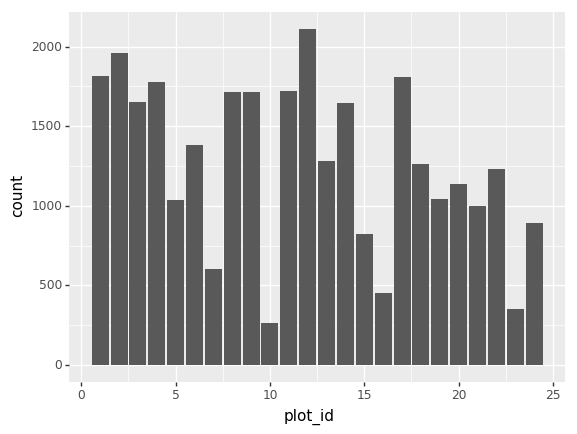

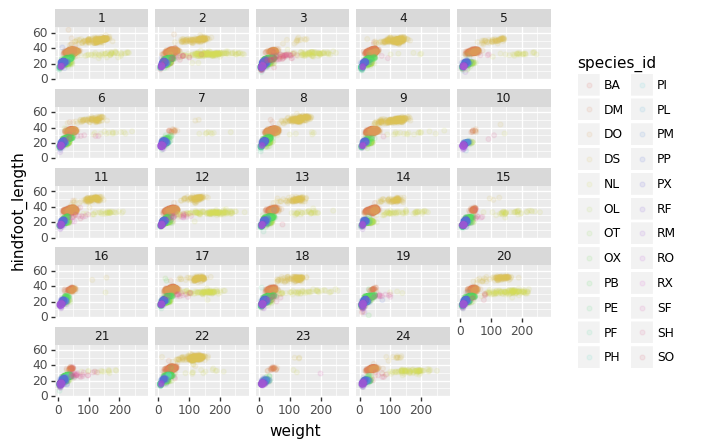

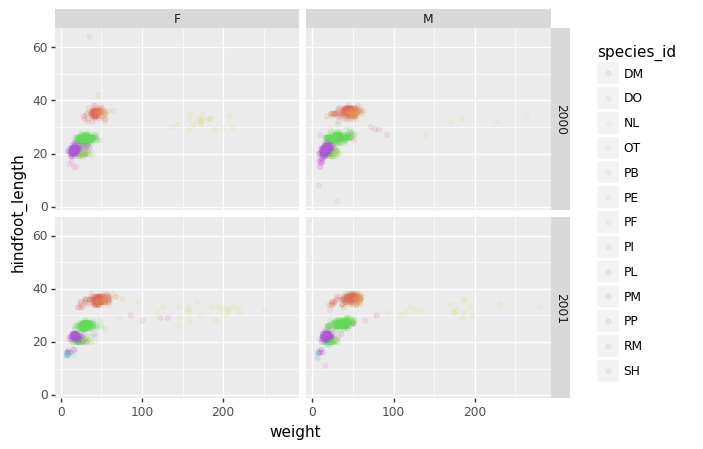

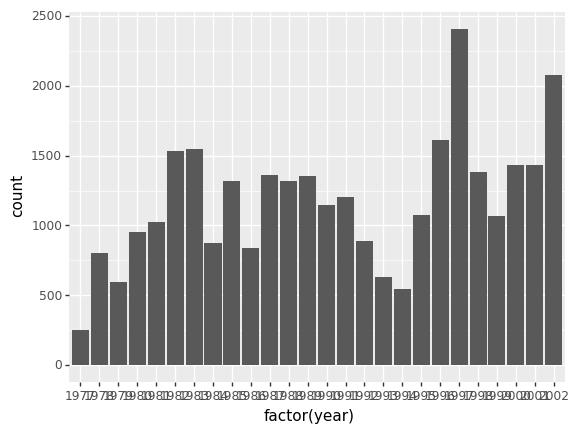

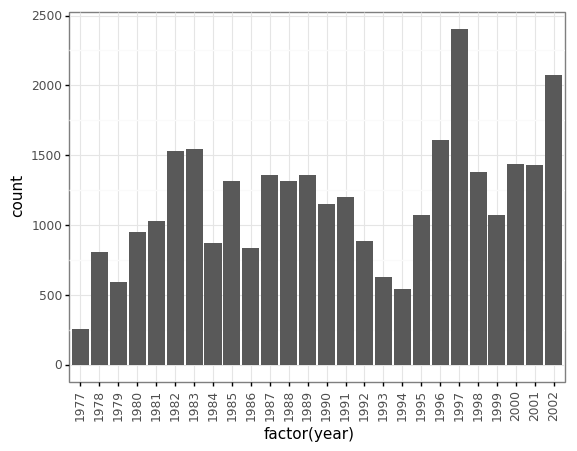

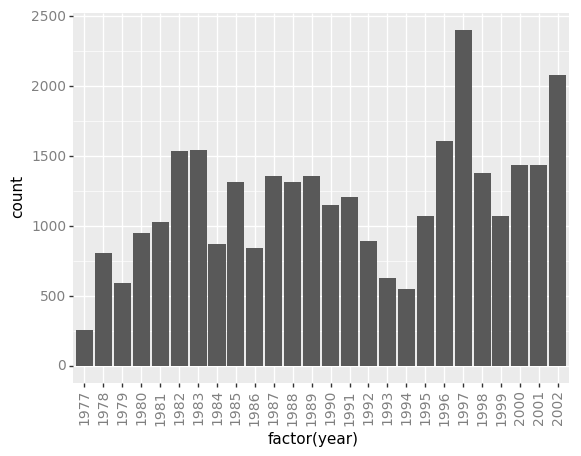

species_counts.plot(kind='bar');

Count per species site

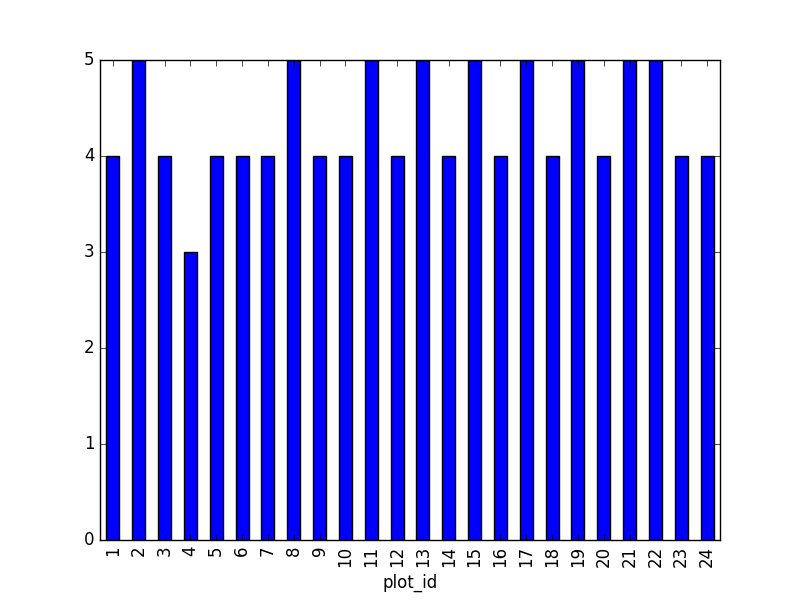

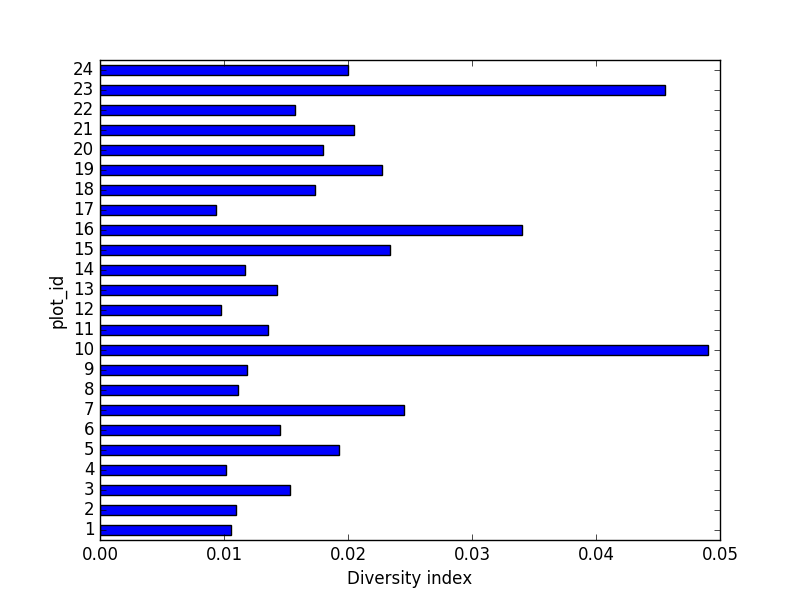

We can also look at how many animals were captured in each site:

PYTHON

total_count = surveys_df.groupby('plot_id')['record_id'].nunique()

# Let's plot that too

total_count.plot(kind='bar');

Challenge - Plots

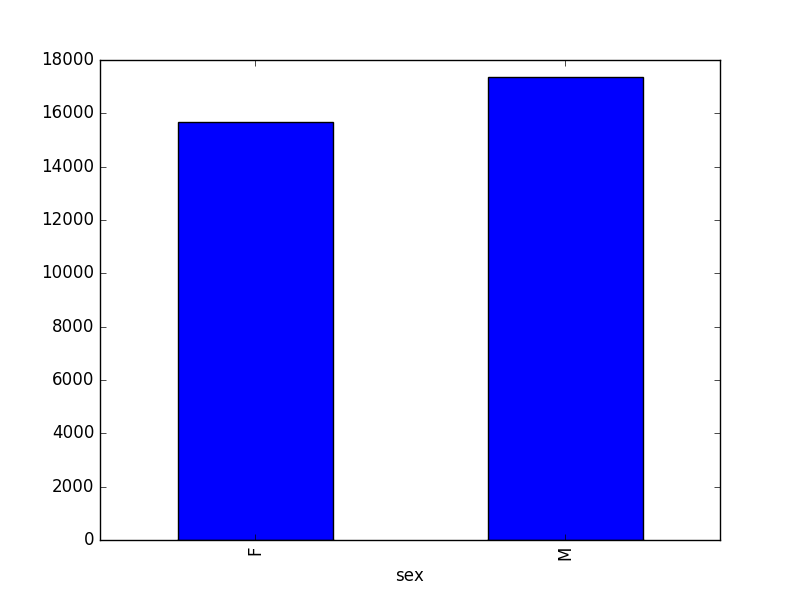

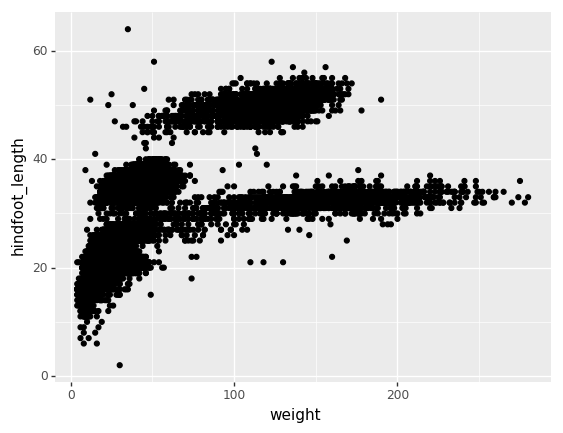

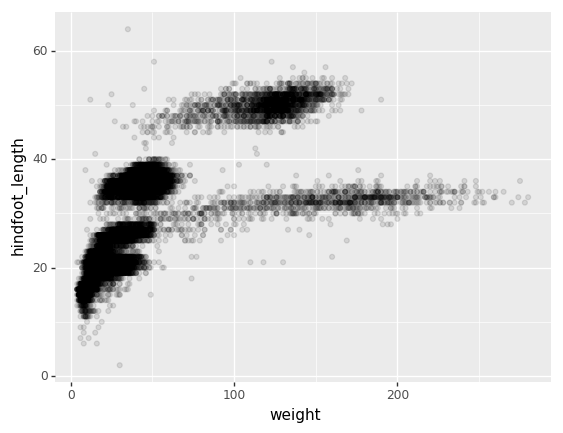

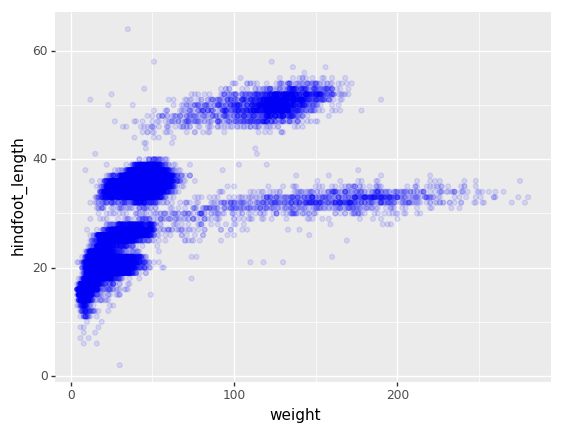

- Create a plot of average weight across all species per site.

- Create a plot of total males versus total females for the entire dataset.

surveys_df.groupby('plot_id')['weight'].mean().plot(kind='bar')  # select weight before averaging so non-numeric columns are skipped

surveys_df.groupby('sex').count()["record_id"].plot(kind='bar')

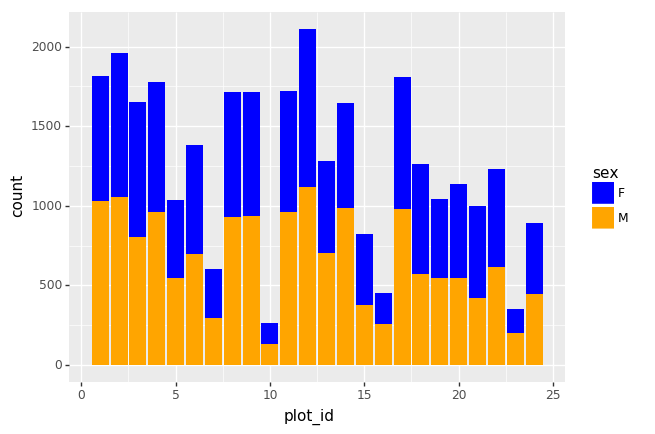

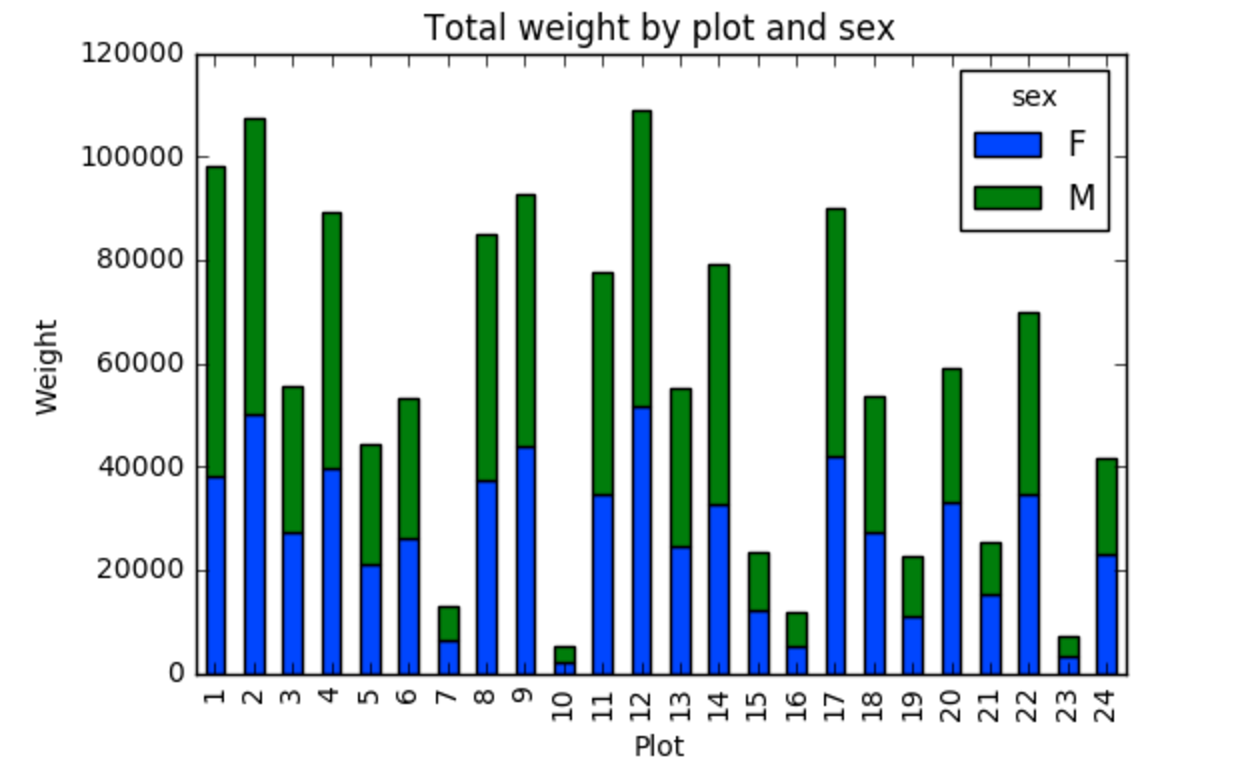

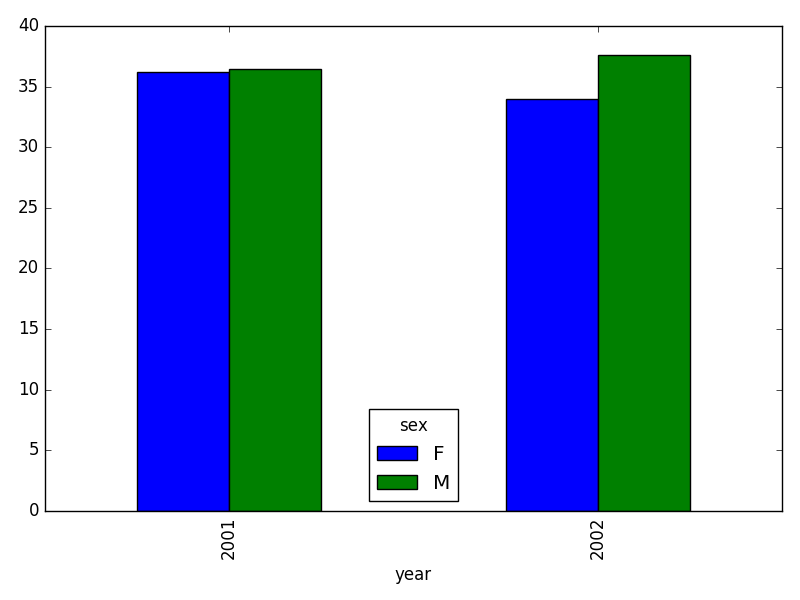

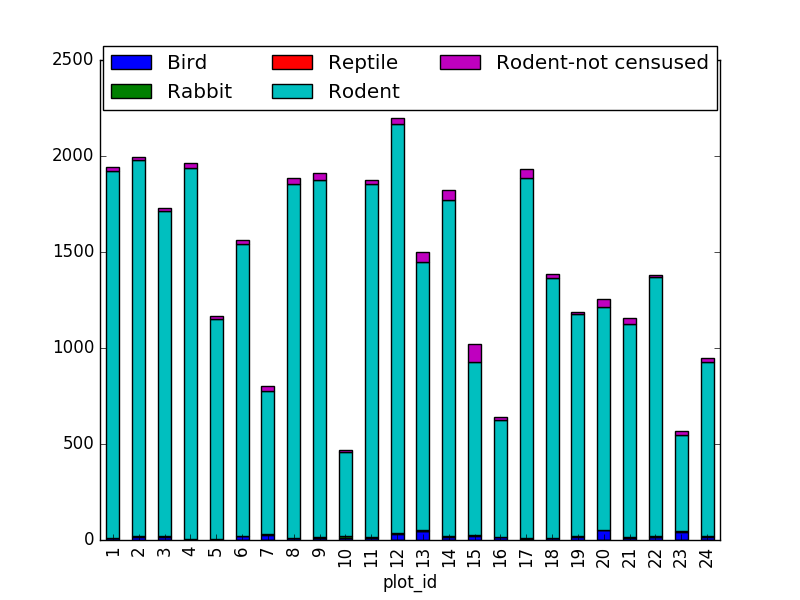

Summary Plotting Challenge

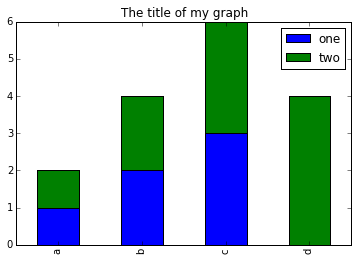

Create a stacked bar plot, with weight on the Y axis, and the stacked variable being sex. The plot should show total weight by sex for each site. Some tips are below to help you solve this challenge:

- For more information on pandas plots, see pandas’ documentation page on visualization.

- You can use the code that follows to create a stacked bar plot, but the data to stack need to be in individual columns. Here’s a simple example with some data where ‘a’, ‘b’, ‘c’ and ‘d’ are the groups, and ‘one’ and ‘two’ are the subgroups.

PYTHON

d = {'one' : pd.Series([1., 2., 3.], index=['a', 'b', 'c']), 'two' : pd.Series([1., 2., 3., 4.], index=['a', 'b', 'c', 'd'])}

pd.DataFrame(d)

This shows the following data:

OUTPUT

one two

a 1.0 1.0

b 2.0 2.0

c 3.0 3.0

d NaN 4.0

We can plot the above with

PYTHON

# Plot stacked data so columns 'one' and 'two' are stacked

my_df = pd.DataFrame(d)

my_df.plot(kind='bar', stacked=True, title="The title of my graph")

- You can use the .unstack() method to transform grouped data into one column per subgroup, ready for plotting. Try running .unstack() on some DataFrames above and see what it yields.
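This minimal sketch shows what .unstack() does before you tackle the challenge, using a hypothetical five-row table. The grouped sum has a two-level (plot_id, sex) index, and unstack() turns the sex level into columns:

```python
import pandas as pd

# Hypothetical weights for two plots and two sexes
toy_df = pd.DataFrame({
    "plot_id": [1, 1, 1, 2, 2],
    "sex": ["F", "M", "M", "F", "M"],
    "weight": [10.0, 20.0, 5.0, 7.0, 3.0],
})

# Series with a two-level (plot_id, sex) index
site_sex_sum = toy_df.groupby(["plot_id", "sex"])["weight"].sum()

# unstack() moves the inner index level (sex) into columns
wide = site_sex_sum.unstack()
print(wide)
```

Each column of wide holds one subgroup, which is the layout that plot(kind='bar', stacked=True) stacks.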

Start by transforming the grouped data (by site and sex) into an unstacked layout, then create a stacked plot.

First we group data by site and by sex, and then calculate a total for each site.

PYTHON

by_site_sex = surveys_df.groupby(['plot_id', 'sex'])

site_sex_count = by_site_sex['weight'].sum()

This calculates the sums of weights for each sex within each site as a table:

OUTPUT


plot_id sex

1 F 38253

M 59979

2 F 50144

M 57250

3 F 27251

M 28253

4 F 39796

M 49377

<other sites removed for brevity>

Below we’ll use .unstack() on our grouped data to figure out the total weight that each sex contributed to each site.

PYTHON

by_site_sex = surveys_df.groupby(['plot_id', 'sex'])

site_sex_count = by_site_sex['weight'].sum()

site_sex_count.unstack()

The unstack method above will display the following output:

OUTPUT

sex F M

plot_id

1 38253 59979

2 50144 57250

3 27251 28253

4 39796 49377

<other sites removed for brevity>

Now, create a stacked bar plot with that data where the weights for each sex are stacked by site.

Rather than display it as a table, we can plot the above data by stacking the values of each sex as follows:

PYTHON

by_site_sex = surveys_df.groupby(['plot_id', 'sex'])

site_sex_count = by_site_sex['weight'].sum()

spc = site_sex_count.unstack()

s_plot = spc.plot(kind='bar', stacked=True, title="Total weight by site and sex")

s_plot.set_ylabel("Weight")

s_plot.set_xlabel("Plot")

Key Points

- Libraries enable us to extend the functionality of Python.

- Pandas is a popular library for working with data.

- A DataFrame is a Pandas data structure that allows one to access data by column (name or index) or row.

- Aggregating data using the groupby() method enables you to generate useful summaries of data quickly.

- Plots can be created from DataFrames or subsets of data that have been generated with groupby().

Content from Indexing, Slicing and Subsetting DataFrames in Python

Last updated on 2024-02-26

Overview

Questions

- How can I access specific data within my data set?

- How can Python and Pandas help me to analyse my data?

Objectives

- Describe what 0-based indexing is.

- Manipulate and extract data using column headings and index locations.

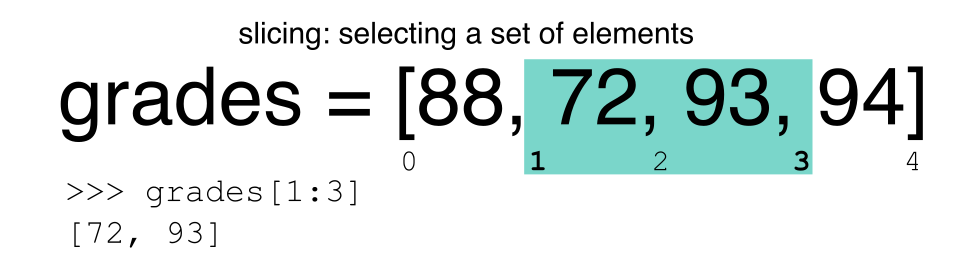

- Employ slicing to select sets of data from a DataFrame.

- Employ label and integer-based indexing to select ranges of data in a dataframe.

- Reassign values within subsets of a DataFrame.

- Create a copy of a DataFrame.

- Query / select a subset of data using a set of criteria using the following operators: ==, !=, >, <, >=, <=.

- Locate subsets of data using masks.

- Describe BOOLEAN objects in Python and manipulate data using BOOLEANs.

In the first episode of this lesson, we read a CSV file into a pandas DataFrame. We learned how to:

- save a DataFrame to a named object,

- perform basic math on data,

- calculate summary statistics, and

- create plots based on the data we loaded into pandas.

In this lesson, we will explore ways to access different parts of the data using:

- indexing,

- slicing, and

- subsetting.

Loading our data

We will continue to use the surveys dataset that we worked with in the last episode. Let’s reopen and read in the data again:
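The sketch below shows what this step does in a self-contained way. pd.read_csv can also read from an in-memory buffer. The three-row CSV here is hypothetical; with the real data you would pass the path to the surveys CSV file instead:

```python
import io

import pandas as pd

# A tiny hypothetical CSV held in memory; with the real data you would
# pass the path to the surveys CSV file instead of this buffer
csv_text = """record_id,month,day,year,plot_id,species_id,sex,hindfoot_length,weight
1,7,16,1977,2,NL,M,32,
2,7,16,1977,3,NL,M,33,
3,7,16,1977,2,DM,F,37,
"""

toy_df = pd.read_csv(io.StringIO(csv_text))
print(toy_df)  # empty weight fields are read as NaN
```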

Indexing and Slicing in Python

We often want to work with subsets of a DataFrame object. There are different ways to accomplish this including: using labels (column headings), numeric ranges, or specific x,y index locations.

Selecting data using Labels (Column Headings)

We use square brackets [] to select a subset of a Python object. For example, we can select all data from a column named species_id from the surveys_df DataFrame by name. There are two ways to do this:

PYTHON

# TIP: use the .head() method we saw earlier to make output shorter

# Method 1: select a 'subset' of the data using the column name

surveys_df['species_id']

# Method 2: use the column name as an 'attribute'; gives the same output

surveys_df.species_id

We can also create a new object that contains only the data within the species_id column as follows:

PYTHON

# Creates an object, surveys_species, that only contains the `species_id` column

surveys_species = surveys_df['species_id']

We can pass a list of column names too, as an index to select columns in that order. This is useful when we need to reorganize our data.

NOTE: If a column name is not contained in the DataFrame, an exception (error) will be raised.

PYTHON

# Select the species and plot columns from the DataFrame

surveys_df[['species_id', 'plot_id']]

# What happens when you flip the order?

surveys_df[['plot_id', 'species_id']]

# What happens if you ask for a column that doesn't exist?

surveys_df['speciess']

Python tells us what type of error it is in the traceback. At the bottom it says KeyError: 'speciess', which means that speciess is not a valid column name (nor a valid key in the related Python data type dictionary).
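Here is a minimal sketch of the column selections above on a hypothetical DataFrame. It also catches the KeyError raised for a misspelled column name:

```python
import pandas as pd

toy_df = pd.DataFrame({
    "species_id": ["NL", "DM"],
    "plot_id": [2, 3],
    "weight": [None, 37.0],
})

one_column = toy_df["species_id"]              # a Series
reordered = toy_df[["plot_id", "species_id"]]  # a DataFrame, columns in the order listed

missing_column_error = None
try:
    toy_df["speciess"]                         # misspelled column name
except KeyError as err:
    missing_column_error = err
    print("KeyError:", err)
```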

Reminder

The Python language defines reserved words (keywords) that cannot be used as identifiers when naming objects and variables. Examples of reserved words in Python include the Boolean values True and False, and the operators and, or, and not, among others.

The full list of reserved words for Python version 3 is provided at https://docs.python.org/3/reference/lexical_analysis.html#identifiers.

When naming objects and variables, it’s also important to avoid using the names of built-in data structures and methods. For example, a list is a built-in data type. It is possible to use the word ‘list’ as an identifier for a new object, for example list = ['apples', 'oranges', 'bananas']. However, you would then be unable to create an empty list using list() or convert a tuple to a list using list(sometuple).
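This minimal sketch uses the standard-library keyword module to show the difference between a reserved word and a built-in name. The shadowing happens inside a function (shadowing_demo is a hypothetical helper), so the built-in list stays usable afterwards:

```python
import keyword

# Reserved words cannot be used as names at all
print(keyword.iskeyword("True"))  # True
print(keyword.iskeyword("list"))  # False: 'list' is a built-in name, not a keyword

def shadowing_demo(sometuple):
    # Inside this function, 'list' is rebound to an ordinary list object,
    # hiding the built-in list type
    list = ["apples", "oranges", "bananas"]
    try:
        return list(sometuple)
    except TypeError as err:  # 'list' object is not callable
        return f"TypeError: {err}"

sometuple = ("apples", "oranges")
print(shadowing_demo(sometuple))  # reports the TypeError
print(list(sometuple))            # outside the function the built-in still works
```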

Extracting Range based Subsets: Slicing

Reminder

Python uses 0-based indexing.

Let’s remind ourselves that Python uses 0-based indexing. This means that the first element in an object is located at position 0. This is different from other tools like R and Matlab that index elements within objects starting at 1.

- a[0] returns 1, as Python starts with element 0 (this may be different from what you have previously experienced with other languages, e.g. MATLAB and R).

- a[5] raises an IndexError. The error is raised because the list a has no element with index 5: it has only five entries, indexed from 0 to 4.

- a[len(a)] also raises an IndexError. len(a) returns 5, making a[len(a)] equivalent to a[5]. To retrieve the final element of a list, use the index -1, e.g. a[-1]:

OUTPUT

5
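You can check the points above with a hypothetical five-element list a = [1, 2, 3, 4, 5]:

```python
a = [1, 2, 3, 4, 5]  # hypothetical five-element list

print(a[0])    # 1: the first element is at index 0
print(a[-1])   # 5: negative indices count back from the end
print(len(a))  # 5

try:
    a[len(a)]  # same as a[5]; valid indices are 0 to 4
except IndexError as err:
    print("IndexError:", err)
```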

Slicing Subsets of Rows in Python

Slicing using the [] operator selects a set of rows and/or columns from a DataFrame. To slice out a set of rows, you use the following syntax: data[start:stop]. When slicing in pandas the start bound is included in the output. The stop bound is one step BEYOND the row you want to select. So if you want to select rows 0, 1 and 2 your code would look like this:

The stop bound in Python is different from what you might be used to in languages like Matlab and R.
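Rows 0, 1 and 2 are selected with the slice [0:3]. Here is a minimal sketch on a hypothetical six-row DataFrame, alongside the [-1:] slice used below:

```python
import pandas as pd

# Hypothetical 6-row DataFrame
toy_df = pd.DataFrame({"record_id": [1, 2, 3, 4, 5, 6]})

first_three = toy_df[0:3]  # rows 0, 1 and 2; stop bound 3 is excluded
last_row = toy_df[-1:]     # from the last row to the end
print(first_three)
print(last_row)
```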

PYTHON

# Select the first 5 rows (rows 0, 1, 2, 3, 4)

surveys_df[:5]

# Select the last row of the DataFrame

# (the slice starts at the last row, and ends at the end of the DataFrame)

surveys_df[-1:]

We can also reassign values within subsets of our DataFrame.

But before we do that, let’s look at the difference between the concept of copying objects and the concept of referencing objects in Python.

Copying Objects vs Referencing Objects in Python

Let’s start with an example:

PYTHON

# Using the copy() method

true_copy_surveys_df = surveys_df.copy()

# Using the '=' operator

ref_surveys_df = surveys_df

You might think that the code ref_surveys_df = surveys_df creates a fresh distinct copy of the surveys_df DataFrame object. However, using the = operator in the simple statement y = x does not create a copy of our DataFrame. Instead, y = x creates a new variable y that references the same object that x refers to. To state this another way, there is only one object (the DataFrame), and both x and y refer to it.

In contrast, the copy() method for a DataFrame creates a true copy of the DataFrame.

Let’s look at what happens when we reassign the values within a subset of the DataFrame that references another DataFrame object:

PYTHON

# Assign the value `0` to the first three rows of data in the DataFrame

ref_surveys_df[0:3] = 0

Let’s try the following code:

PYTHON

# ref_surveys_df was created using the '=' operator

ref_surveys_df.head()

# true_copy_surveys_df was created using the copy() function

true_copy_surveys_df.head()

# surveys_df is the original dataframe

surveys_df.head()

What is the difference between these three dataframes?

When we assigned the first 3 rows the value of 0 using the ref_surveys_df DataFrame, the surveys_df DataFrame was modified too. Recall that we created the reference ref_surveys_df above with ref_surveys_df = surveys_df, so surveys_df and ref_surveys_df refer to the same exact DataFrame object. If either one changes the object, the other will see the same changes to the reference object.

However, true_copy_surveys_df was created via the copy() method. It retains the original values for the first three rows.

To review and recap:

- Copy uses the dataframe’s copy() method

- A Reference is created using the = operator
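Here is a minimal sketch of the same behaviour on a small hypothetical DataFrame. This time iloc assigns to the first two rows through the reference:

```python
import pandas as pd

original = pd.DataFrame({"weight": [40.0, 35.0, 50.0, 20.0]})

true_copy = original.copy()  # independent DataFrame
ref = original               # second name for the same object

ref.iloc[0:2] = 0            # modify the first two rows through the reference

print(ref is original)               # True: one object, two names
print(original["weight"].tolist())   # [0.0, 0.0, 50.0, 20.0]
print(true_copy["weight"].tolist())  # [40.0, 35.0, 50.0, 20.0]
```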

Okay, that’s enough of that. Let’s create a brand new clean dataframe from the original data CSV file.

Slicing Subsets of Rows and Columns in Python

We can select specific ranges of our data in both the row and column directions using either label or integer-based indexing.

- iloc is primarily integer-based indexing, counting from 0. That is, you specify rows and columns by giving a number. Thus, the first row is row 0, the second column is column 1, etc.

- loc is primarily label-based indexing, where you can refer to rows and columns by their name, e.g. column ‘month’. Note that integers may be used, but they are interpreted as labels.

To select a subset of rows and columns from our DataFrame, we can use the iloc indexer. For example, we can select month, day and year (columns 2, 3 and 4 if we start counting at 1), like this:

which gives the output

OUTPUT

month day year

0 7 16 1977

1 7 16 1977

2 7 16 1977

Notice that we asked for a slice from 0:3. This yielded 3 rows of data. When you ask for 0:3, you are actually telling Python to start at index 0 and select rows 0, 1, 2 up to but not including 3.
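Here is a minimal sketch of the same positional selection on a small hypothetical DataFrame. It also selects a single value by row and column position:

```python
import pandas as pd

toy_df = pd.DataFrame({
    "record_id": [1, 2, 3, 4],
    "month": [7, 7, 7, 8],
    "day": [16, 16, 16, 19],
    "year": [1977, 1977, 1977, 1977],
    "sex": ["M", "M", "F", "M"],
})

# Rows at positions 0-2 and columns at positions 1-3 (stop bounds excluded)
subset = toy_df.iloc[0:3, 1:4]
print(subset)  # month, day and year for the first three rows

# A single value by (row position, column position)
print(toy_df.iloc[2, 4])  # 'F': third row, fifth column
```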

Let’s explore some other ways to index and select subsets of data:

PYTHON

# Select all columns for rows of index values 0 and 10

surveys_df.loc[[0, 10], :]

# What does this do?

surveys_df.loc[0, ['species_id', 'plot_id', 'weight']]

# What happens when you type the code below?

surveys_df.loc[[0, 10, 35549], :]

NOTE: Labels must be found in the DataFrame or you will get a KeyError.

Indexing by labels (loc) differs from indexing by integers (iloc). With loc, both the start bound and the stop bound are inclusive. When using loc, integers can be used, but the integers refer to the index label and not the position. For example, surveys_df.loc[1:4] returns the rows labelled 1, 2, 3 and 4, whereas surveys_df.iloc[1:4] returns only the rows at positions 1, 2 and 3.
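Here is a minimal sketch of the loc/iloc difference on a hypothetical DataFrame with the default integer index. Labels and positions coincide here, but loc and iloc treat the stop bound differently:

```python
import pandas as pd

toy_df = pd.DataFrame({"weight": [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]})

by_label = toy_df.loc[1:4]      # labels 1, 2, 3 and 4: stop bound included
by_position = toy_df.iloc[1:4]  # positions 1, 2 and 3: stop bound excluded
print(len(by_label), len(by_position))  # 4 3

missing_label_error = None
try:
    toy_df.loc[[0, 10]]         # label 10 does not exist
except KeyError as err:
    missing_label_error = err
    print("KeyError:", err)
```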

We can also select a specific data value using a row and column location within the DataFrame and iloc indexing:

In this iloc example,

gives the output

OUTPUT

'F'

Remember that Python indexing begins at 0. So, the index location [2, 6] selects the element that is 3 rows down and 7 columns over in the DataFrame.

It is worth noting that rows are selected when using loc with a single list of labels (or iloc with a single list of integers). However, unlike loc or iloc, indexing a data frame directly with labels will select columns (e.g. surveys_df[['species_id', 'plot_id', 'weight']]), while ranges of integers will select rows (e.g. surveys_df[0:13]). Direct indexing of rows is redundant with using iloc, and will raise a KeyError if a single integer or list is used; the error will also occur if index labels are used without loc (or column labels used with it). A useful rule of thumb is the following: integer-based slicing is best done with iloc and will avoid errors (and is generally consistent with indexing of NumPy arrays), label-based slicing of rows is done with loc, and slicing of columns by directly indexing column names.
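Here is a minimal sketch of the rule of thumb on a hypothetical three-row DataFrame. Labels in square brackets select columns, an integer slice selects rows, and a single integer is looked up as a column label:

```python
import pandas as pd

toy_df = pd.DataFrame({
    "species_id": ["NL", "NL", "DM"],
    "plot_id": [2, 3, 2],
})

columns_selected = toy_df[["species_id", "plot_id"]]  # labels -> columns
rows_selected = toy_df[0:2]                           # integer slice -> rows

single_integer_error = None
try:
    toy_df[0]                                         # looks for a column named 0
except KeyError as err:
    single_integer_error = err
    print("toy_df[0] raises KeyError; use toy_df.iloc[0] for the first row")

first_row = toy_df.iloc[0]                            # a Series indexed by column name
```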

Challenge - Range

- What happens when you execute:

surveys_df[0:1]

surveys_df[0]

surveys_df[:4]

surveys_df[:-1]

- What happens when you call:

surveys_df.iloc[0:1]

surveys_df.iloc[0]

surveys_df.iloc[:4, :]

surveys_df.iloc[0:4, 1:4]

surveys_df.loc[0:4, 1:4]

- How are the last two commands different?

- surveys_df[0:3] returns the first three rows of the DataFrame:

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight

0 1 7 16 1977 2 NL M 32.0 NaN

1 2 7 16 1977 3 NL M 33.0 NaN

2 3 7 16 1977 2 DM F 37.0 NaN

- surveys_df[0] results in a ‘KeyError’: indexing a DataFrame directly with a single value looks for a column with that label, and there is no column named 0. Use iloc to select a row by position.

- surveys_df[0:1] can be used to obtain only the first row.

- surveys_df[:5] slices from the first row to the fifth:

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight

0 1 7 16 1977 2 NL M 32.0 NaN

1 2 7 16 1977 3 NL M 33.0 NaN

2 3 7 16 1977 2 DM F 37.0 NaN

3 4 7 16 1977 7 DM M 36.0 NaN

4 5 7 16 1977 3 DM M 35.0 NaN

- surveys_df[:-1] provides everything except the final row of the DataFrame. You can use negative index numbers to count backwards from the last entry.

- surveys_df.iloc[0:1] returns the first row

- surveys_df.iloc[0] returns the first row as a Series, labelled by column name

- surveys_df.iloc[:4, :] returns all columns of the first four rows

- surveys_df.iloc[0:4, 1:4] selects specified columns of the first four rows

- surveys_df.loc[0:4, 1:4] results in a ‘TypeError’ - see below.

- While iloc uses integers as indices and slices accordingly, loc works with labels. It is like accessing values from a dictionary, asking for the key names. Column names 1:4 do not exist, so the call to loc above results in an error. Check also the difference between surveys_df.loc[0:4] and surveys_df.iloc[0:4].

Subsetting Data using Criteria

We can also select a subset of our data using criteria. For example, we can select all rows that have a year value of 2002:

Which produces the following output:

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight

33320 33321 1 12 2002 1 DM M 38 44

33321 33322 1 12 2002 1 DO M 37 58

33322 33323 1 12 2002 1 PB M 28 45

33323 33324 1 12 2002 1 AB NaN NaN NaN

33324 33325 1 12 2002 1 DO M 35 29

...

35544 35545 12 31 2002 15 AH NaN NaN NaN

35545 35546 12 31 2002 15 AH NaN NaN NaN

35546 35547 12 31 2002 10 RM F 15 14

35547 35548 12 31 2002 7 DO M 36 51

35548 35549 12 31 2002 5 NaN NaN NaN NaN

[2229 rows x 9 columns]

Or we can select all rows that do not contain the year 2002:

We can define sets of criteria too:
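Here is a minimal sketch of single and combined criteria on a hypothetical table. When you combine conditions with & (and) or | (or), each comparison needs its own parentheses, because these operators bind more tightly than comparisons:

```python
import pandas as pd

toy_df = pd.DataFrame({
    "year": [2001, 2002, 2002, 1999, 2002],
    "weight": [40.0, 8.0, 55.0, 7.0, None],
})

in_2002 = toy_df[toy_df["year"] == 2002]
not_2002 = toy_df[toy_df["year"] != 2002]

# Both conditions must hold; NaN weights compare as False
light_2002 = toy_df[(toy_df["year"] == 2002) & (toy_df["weight"] <= 10)]
print(len(in_2002), len(not_2002), len(light_2002))  # 3 2 1
```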

Python Syntax Cheat Sheet

We can use the syntax below when querying data by criteria from a DataFrame. Experiment with selecting various subsets of the “surveys” data.

- Equals: ==

- Not equals: !=

- Greater than: >

- Less than: <

- Greater than or equal to: >=

- Less than or equal to: <=

Challenge - Queries

- Select a subset of rows in the surveys_df DataFrame that contain data from the year 1999 and that contain weight values less than or equal to 8. How many rows did you end up with? What did your neighbor get?

- You can use the isin method in Python to query a DataFrame based upon a list of values as follows:

Use the isin method to find all plots that contain particular species in the “surveys” DataFrame. How many records contain these values?

- Experiment with other queries. Create a query that finds all rows with a weight value greater than or equal to 0.

- The ~ symbol in Python can be used to return the OPPOSITE of the selection that you specify: it inverts a Boolean mask, so applying it to an isin selection means “is not in”. Write a query that selects all rows with sex NOT equal to ‘M’ or ‘F’ in the “surveys” data.

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight

29082 29083 1 16 1999 21 RM M 16.0 8.0

29196 29197 2 20 1999 18 RM M 18.0 8.0

29421 29422 3 15 1999 16 RM M 15.0 8.0

29903 29904 10 10 1999 4 PP M 20.0 7.0

29905 29906 10 10 1999 4 PP M 21.0 4.0

If you are only interested in how many rows meet the criteria, the sum of True values could be used instead:

OUTPUT

5

For example, using PB and PL:

OUTPUT

array([ 1, 10, 6, 24, 2, 23, 19, 12, 20, 22, 3, 9, 14, 13, 21, 7, 11, 15, 4, 16, 17, 8, 18, 5])

OUTPUT

(24,)

surveys_df[surveys_df["weight"] >= 0]

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight

13 14 7 16 1977 8 DM NaN NaN NaN

18 19 7 16 1977 4 PF NaN NaN NaN

33 34 7 17 1977 17 DM NaN NaN NaN

56 57 7 18 1977 22 DM NaN NaN NaN

76 77 8 19 1977 4 SS NaN NaN NaN

... ... ... ... ... ... ... ... ... ...

35527 35528 12 31 2002 13 US NaN NaN NaN

35543 35544 12 31 2002 15 US NaN NaN NaN

35544 35545 12 31 2002 15 AH NaN NaN NaN

35545 35546 12 31 2002 15 AH NaN NaN NaN

35548 35549 12 31 2002 5 NaN NaN NaN NaN

[2511 rows x 9 columns]

Using masks to identify a specific condition

A mask can be useful to locate where a particular subset of values exist or don’t exist - for example, NaN, or “Not a Number” values. To understand masks, we also need to understand BOOLEAN objects in Python.

Boolean values include True or False. For example,

When we ask Python whether x is greater than 5, it returns False. This is Python’s way to say “No”. Indeed, the value of x is 5, and 5 is not greater than 5.
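Here is the comparison described above as a short sketch:

```python
x = 5

print(x > 5)        # False: 5 is not greater than 5
print(x >= 5)       # True
print(type(x > 5))  # <class 'bool'>
```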

To create a boolean mask:

- Set the True / False criteria (e.g. values > 5 = True)

- Python will then assess each value in the object to determine whether the value meets the criteria (True) or not (False).

- Python creates an output object that is the same shape as the original object, but with a True or False value for each index location.

Let’s try this out. Let’s identify all locations in the survey data that have null (missing or NaN) data values. We can use the pandas isnull function (pd.isnull) to do this. It checks each cell for a null value: if an element has a null value, it will be assigned a value of True in the output object.

A snippet of the output is below:

OUTPUT

record_id month day year plot_id species_id sex hindfoot_length weight

0 False False False False False False False False True

1 False False False False False False False False True

2 False False False False False False False False True

3 False False False False False False False False True

4 False False False False False False False False True

[35549 rows x 9 columns]

To select the rows where there are null values, we can use the mask as an index to subset our data as follows:

PYTHON

# To select just the rows with NaN values, we can use the 'any()' method

surveys_df[pd.isnull(surveys_df).any(axis=1)]

Note that the weight column of our DataFrame contains many null or NaN values. We will explore ways of dealing with this in the next episode on Data Types and Formats.
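To see how isnull and any(axis=1) work together, here is a sketch on a tiny made-up DataFrame (toy_df is hypothetical, not the survey data):

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for surveys_df with a few missing values
toy_df = pd.DataFrame({
    "sex": ["M", np.nan, "F", "M"],
    "weight": [40.0, 35.0, np.nan, 12.0],
})

# pd.isnull returns a DataFrame of the same shape, True where a cell is null
null_mask = pd.isnull(toy_df)

# any(axis=1) collapses each row to True if at least one of its cells is null
rows_with_nulls = toy_df[null_mask.any(axis=1)]
print(rows_with_nulls)
```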

We can run isnull on a particular column too. What does

the code below do?

PYTHON

# What does this do?

empty_weights = surveys_df[pd.isnull(surveys_df['weight'])]['weight']

print(empty_weights)

Let’s take a minute to look at the statement above. We are using the Boolean object pd.isnull(surveys_df['weight']) as an index to surveys_df. We are asking Python to select the rows that have a NaN value for weight, and then to keep only the weight column of those rows.
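The same pattern on a hypothetical four-row DataFrame, with one extra line showing that summing the mask counts the missing weights:

```python
import numpy as np
import pandas as pd

# Made-up data for illustration
toy_df = pd.DataFrame({
    "record_id": [1, 2, 3, 4],
    "weight": [40.0, np.nan, np.nan, 12.0],
})

# Boolean Series: True where weight is missing
weight_is_null = pd.isnull(toy_df["weight"])

# Use it to select rows, then keep only the weight column
empty_weights = toy_df[weight_is_null]["weight"]
print(empty_weights)

# Summing the mask counts the missing weights
print(weight_is_null.sum())  # 2
```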

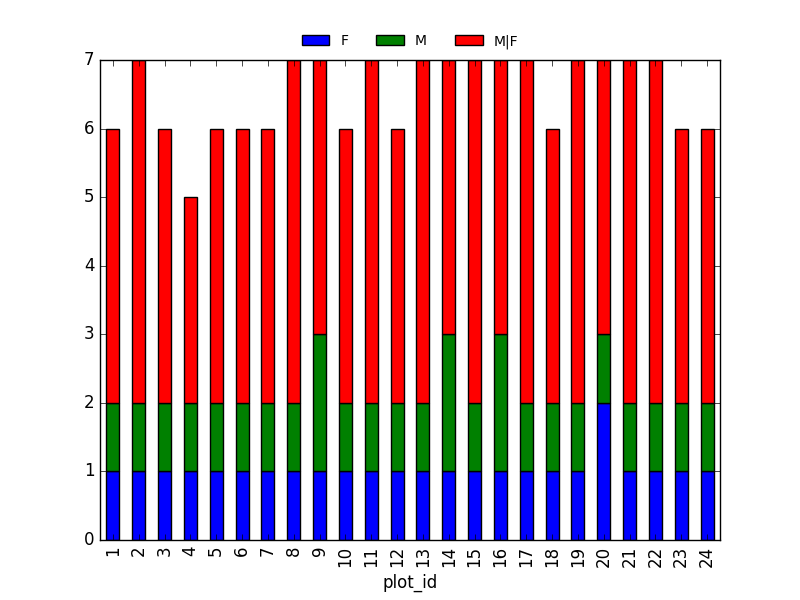

Challenge - Putting it all together

- Create a new DataFrame that only contains observations with sex values that are not female or male. Print the number of rows in this new DataFrame. Verify the result by comparing the number of rows in the new DataFrame with the number of rows in the surveys DataFrame where sex is null.

- Create a new DataFrame that contains only observations that are of sex male or female and where weight values are greater than 0. Create a stacked bar plot of average weight by plot with male vs female values stacked for each plot.

OUTPUT

2511

OUTPUT

True

PYTHON

# Select males and females with a positive weight, keeping three columns
# (each condition needs its own parentheses because & binds more tightly than >)
stack_selection = surveys_df[(surveys_df['sex'].isin(['M', 'F'])) & (surveys_df["weight"] > 0.)][["sex", "weight", "plot_id"]]

# Calculate the mean weight for each plot_id and sex combination, then move sex into columns
stack_selection = stack_selection.groupby(["plot_id", "sex"]).mean().unstack()

# Make a stacked bar plot from this
stack_selection.plot(kind='bar', stacked=True)
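The first part of the challenge can be sketched on a small made-up DataFrame (toy_df is hypothetical; with the real data the same two steps would be applied to surveys_df):

```python
import numpy as np
import pandas as pd

# Made-up data; the real exercise uses surveys_df
toy_df = pd.DataFrame({
    "sex": ["M", "F", np.nan, "M", np.nan],
    "weight": [40.0, 35.0, 20.0, np.nan, 15.0],
})

# Rows whose sex is neither "M" nor "F" (here, the missing values)
not_m_or_f = toy_df[~toy_df["sex"].isin(["M", "F"])]
print(len(not_m_or_f))  # 2

# Cross-check against the number of rows where sex is null
print(len(not_m_or_f) == pd.isnull(toy_df["sex"]).sum())  # True
```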

Key Points

- In Python, portions of data can be accessed using indices, slices, column headings, and condition-based subsetting.

- Python uses 0-based indexing, in which the first element in a list, tuple or any other data structure has an index of 0.

- Pandas enables common data exploration steps such as data indexing, slicing and conditional subsetting.

Content from Data Types and Formats

Last updated on 2024-07-15

Overview

Questions

- What types of data can be contained in a DataFrame?

- Why is the data type important?

Objectives

- Describe how information is stored in a pandas DataFrame.

- Define the two main types of data in pandas: text and numerics.

- Examine the structure of a DataFrame.

- Modify the format of values in a DataFrame.

- Describe how data types impact operations.

- Define, manipulate, and interconvert integers and floats in Python/pandas.

- Analyze datasets having missing/null values (NaN values).

- Write manipulated data to a file.

The format of individual columns and rows will impact analysis performed on a dataset read into a pandas DataFrame. For example, you can’t perform mathematical calculations on a string (text formatted data). This might seem obvious; however, sometimes numeric values are read into pandas as strings. In this situation, when you then try to perform calculations on the string-formatted numeric data, you get an error or unexpected results.
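A minimal sketch of how a numeric column can end up stored as text, assuming a made-up CSV in which one weight was typed as the word unknown. pd.to_numeric with errors="coerce" is one way to convert it, turning the unparseable entry into NaN:

```python
import io

import pandas as pd

# A tiny in-memory CSV (made up for illustration); one weight was typed as text
csv_text = "record_id,weight\n1,12\n2,7\n3,unknown\n"
df = pd.read_csv(io.StringIO(csv_text))

# Because of the stray text, the column is not read as numbers
print(df["weight"].dtype)
print(pd.api.types.is_numeric_dtype(df["weight"]))  # False

# pd.to_numeric converts it; errors="coerce" turns unparseable text into NaN
weights = pd.to_numeric(df["weight"], errors="coerce")
print(weights.dtype)     # float64
print(weights.tolist())  # [12.0, 7.0, nan]
```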

In this lesson we will review ways to explore and better understand the structure and format of our data.

Types of Data

How information is stored in a DataFrame or a Python object affects what we can do with it and the outputs of calculations as well. There are two main types of data that we will explore in this lesson: numeric and text data types.

Numeric Data Types

Numeric data types include integers and floats. A floating point number (known as a float) has a decimal point even if the value after it is 0. For example: 1.13, 2.0, 1234.345. If we have a column that contains both integers and floating point numbers, pandas will assign the entire column to the float data type so the decimal points are not lost.
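A quick check of this upcasting rule on two small Series (the values are arbitrary):

```python
import pandas as pd

# Mixing integers and floats in one column: pandas upcasts everything to float64
mixed = pd.Series([1, 2.5, 3])
print(mixed.dtype)     # float64
print(mixed.tolist())  # [1.0, 2.5, 3.0]

# A column of whole numbers only stays integer
whole = pd.Series([1, 2, 3])
print(whole.dtype)     # int64
```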

An integer will never have a decimal point. Thus if we wanted to store 1.13 as an integer, it would be stored as 1. Similarly, 1234.345 would be stored as 1234. You will often see the data type int64 in pandas, which stands for 64-bit integer. The 64 refers to the number of bits of memory allocated to store each value, which determines the range of whole numbers a single cell can hold. Allocating a fixed amount of space ahead of time allows computers to optimize storage and processing efficiency.
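A sketch of both points: casting floats to int64 drops the fractional part, and NumPy's iinfo reports the range that 64 bits allow:

```python
import numpy as np
import pandas as pd

floats = pd.Series([1.13, 1234.345])

# Casting to a 64-bit integer drops the fractional part
as_int = floats.astype("int64")
print(as_int.tolist())  # [1, 1234]

# 64 bits bound the range of values an int64 cell can hold
print(np.iinfo(np.int64).min)  # -9223372036854775808
print(np.iinfo(np.int64).max)  # 9223372036854775807
```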

Text Data Type

The text data type is known as a string in Python, or object in pandas. Strings can contain numbers and/or characters. For example, a string might be a word, a sentence, or several sentences. A pandas object might also be a plot name like 'plot1'. A string can also contain or consist of numbers. For instance, '1234' could be stored as a string, as could '10.23'. However, strings that contain numbers cannot be used for mathematical operations!
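In plain Python, the difference between a string of digits and a number looks like this (the values mirror the examples in the text):

```python
# A string of digits is still text
number_text = "1234"

# Adding a number to a string raises a TypeError
addition_failed = False
try:
    number_text + 1
except TypeError as err:
    addition_failed = True
    print("TypeError:", err)

# For two strings, + means concatenation, not addition
print(number_text + "1")     # 12341

# Converting to a numeric type first makes arithmetic possible
print(int(number_text) + 1)  # 1235
print(float("10.23"))        # 10.23
```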

pandas and base Python use slightly different names for data types. More on this is in the table below:

| Pandas Type | Native Python Type | Description |
|---|---|---|
| object | string | The most general dtype. Will be assigned to your column if the column has mixed types (numbers and strings). |
| int64 | int | Integer (whole) numbers. 64 refers to the number of bits of memory allocated to hold each value. |
| float64 | float | Numbers with decimals. If a column contains numbers and NaNs (see below), pandas will default to float64, because NaN is itself a floating point value and cannot be stored in an integer column. |
| bool | bool | True/False values |
| datetime64[ns], timedelta64[ns] | N/A (but see the datetime module in Python’s standard library) | Values meant to hold time data. Look into these for time series experiments. |
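One way to see several rows of this table in action is to let pandas infer the dtype of a few small Series; the values are made up:

```python
import pandas as pd

int_dtype = pd.Series([1, 2, 3]).dtype        # whole numbers
float_dtype = pd.Series([1, 2, None]).dtype   # numbers plus a missing value
object_dtype = pd.Series([1, "plot1"]).dtype  # numbers mixed with strings
bool_dtype = pd.Series([True, False]).dtype   # True/False values
date_dtype = pd.to_datetime(pd.Series(["1999-01-16", "2002-12-31"])).dtype

print(int_dtype, float_dtype, object_dtype, bool_dtype)  # int64 float64 object bool
# The exact datetime resolution (e.g. [ns]) can depend on the pandas version
print(date_dtype)
```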

Checking the format of our data

Now that we’re armed with a basic understanding of numeric and text

data types, let’s explore the format of our survey data. We’ll be

working with the same surveys.csv dataset that we’ve used

in previous lessons.

PYTHON

# Make sure pandas is loaded

import pandas as pd

# Note that pd.read_csv is used because we imported pandas as pd

surveys_df = pd.read_csv("data/surveys.csv")

Remember that we can check the type of an object like this:

PYTHON

type(surveys_df)

OUTPUT

pandas.core.frame.DataFrame

Next, let’s look at the structure of our surveys_df data. In pandas, we can check the type of one column in a DataFrame using the syntax dataframe_name['column_name'].dtype:

OUTPUT

dtype('O')

A type ‘O’ stands for “object”, which in pandas is typically used to hold strings (text).

OUTPUT

dtype('int64')

The type int64 tells us that pandas is storing each value within this column as a 64 bit integer. We can use the dataframe_name.dtypes attribute to view the data type of every column in a DataFrame at once.

This returns:

OUTPUT

record_id int64

month int64

day int64

year int64

plot_id int64

species_id object

sex object

hindfoot_length float64

weight float64

dtype: object

Note that most of the columns in our surveys_df data are of type int64, which means that they are 64 bit integers. But the hindfoot_length and weight columns are floating point values, which means they contain decimals. The species_id and sex columns are objects, which means they contain strings.
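The same kinds of columns can be reproduced with a few made-up rows in the surveys.csv layout, read from an in-memory string rather than the real file. select_dtypes is one way to pick out only the numeric columns:

```python
import io

import pandas as pd

# Made-up rows in the same column layout as surveys.csv (empty cells become NaN)
csv_text = (
    "record_id,month,day,year,plot_id,species_id,sex,hindfoot_length,weight\n"
    "1,7,16,1977,2,NL,M,32,\n"
    "2,7,16,1977,3,NL,M,,\n"
    "3,7,16,1977,2,DM,F,37,40\n"
)
sample_df = pd.read_csv(io.StringIO(csv_text))

# One dtype per column, as with surveys_df.dtypes
print(sample_df.dtypes)

# select_dtypes filters columns by type, e.g. only the numeric ones
numeric_cols = sample_df.select_dtypes(include="number").columns.tolist()
print(numeric_cols)
```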

Working With Integers and Floats

So we’ve learned that computers store numbers in one of two ways: as integers or as floating-point numbers (or floats). Integers are the numbers we usually count with. Floats have fractional parts (decimal places). Let’s next consider how the data type can impact mathematical operations on our data. Addition, subtraction, division and multiplication work on floats and integers as we’d expect.
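A short plain-Python sketch of these rules, using arbitrary numbers (a and b are illustrative names). It also shows floor division (//), which gives the integer result that / produced for two integers in Python 2, and how int() handles a negative float:

```python
a = 7
b = 2

print(a + b, a - b, a * b)  # 9 5 14

# In Python 3, / always returns a float
true_div = a / b
print(true_div)   # 3.5

# // is floor division, which keeps an integer result for two integers
floor_div = a // b
print(floor_div)  # 3

# int() drops the fractional part (truncates toward zero); float() adds .0
print(int(7.8))   # 7
print(int(-7.8))  # -7
print(float(7))   # 7.0
```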

OUTPUT

10

OUTPUT

20

If we divide one integer by another, we get a float. This differs from Python 2, where dividing one integer by another returned an integer (integer division).

OUTPUT

0.5555555555555556

OUTPUT

3.3333333333333335

We can also convert a floating point number to an integer or an integer to a floating point number. Notice that when Python converts a floating point number to an integer, it drops the fractional part (truncating toward zero) rather than rounding to the nearest integer.

OUTPUT

7

OUTPUT

7.0

Working With Our Survey Data

Getting back to our data, we can modify the format of values within

our data, if we want. For instance, we could convert the

record_id field to floating point values.

PYTHON

# Convert the record_id field from an integer to a float

surveys_df['record_id'] = surveys_df['record_id'].astype('float64')

surveys_df['record_id'].dtype

OUTPUT

dtype('float64')

OUTPUT

0 2.0

1 3.0

2 2.0

3 7.0

4 3.0

...

35544 15.0

35545 15.0

35546 10.0

35547 7.0

35548 5.0

ERROR

pandas.errors.IntCastingNaNError: Cannot convert non-finite values (NA or inf) to integer

pandas cannot convert a column from float to int64 if the column contains NaN values.
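A minimal sketch reproducing this behaviour on a small made-up Series, and one possible workaround (dropping the NaN values before casting):

```python
import numpy as np
import pandas as pd

weights = pd.Series([12.0, np.nan, 40.0])

# Casting a float column with NaN to int64 raises an error
# (IntCastingNaNError in recent pandas, which subclasses ValueError)
cast_failed = False
try:
    weights.astype("int64")
except ValueError as err:
    cast_failed = True
    print(type(err).__name__, err)

# Dropping the missing values first makes the cast possible
print(weights.dropna().astype("int64").tolist())  # [12, 40]
```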

Missing Data Values - NaN

What happened in the last challenge activity? Notice that this raises a casting error:

pandas.errors.IntCastingNaNError: Cannot convert non-finite values (NA or inf) to integer

(in older versions of pandas, this may be reported as a ValueError instead). If we look at the weight column in the surveys data, we notice that there are NaN (Not a Number) values. NaN values are undefined values that cannot be represented mathematically. pandas, for example, will read an empty cell in a CSV or Excel sheet as NaN. NaNs have some desirable properties: if we were to average the weight column without replacing our NaNs, pandas would know to skip over those cells.
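A sketch of both behaviours with made-up values: an empty CSV cell becomes NaN, and pandas’ mean skips NaN by default (the skipna parameter), whereas NumPy’s mean does not:

```python
import io

import numpy as np
import pandas as pd

# An empty cell in a CSV is read as NaN
empty_cell_df = pd.read_csv(io.StringIO("record_id,weight\n1,\n2,30\n"))
print(empty_cell_df["weight"].isna().tolist())  # [True, False]

weights = pd.Series([10.0, np.nan, 20.0])

# pandas skips NaN by default (skipna=True), averaging only 10.0 and 20.0
print(weights.mean())               # 15.0
# With skipna=False, the NaN propagates to the result
print(weights.mean(skipna=False))   # nan
# NumPy's mean does not skip NaN
print(np.mean(weights.to_numpy()))  # nan
```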

OUTPUT